Prizes and challenges

Thoughts on this year's Nobel Prizes, followed by a guest post by Henry Wilton

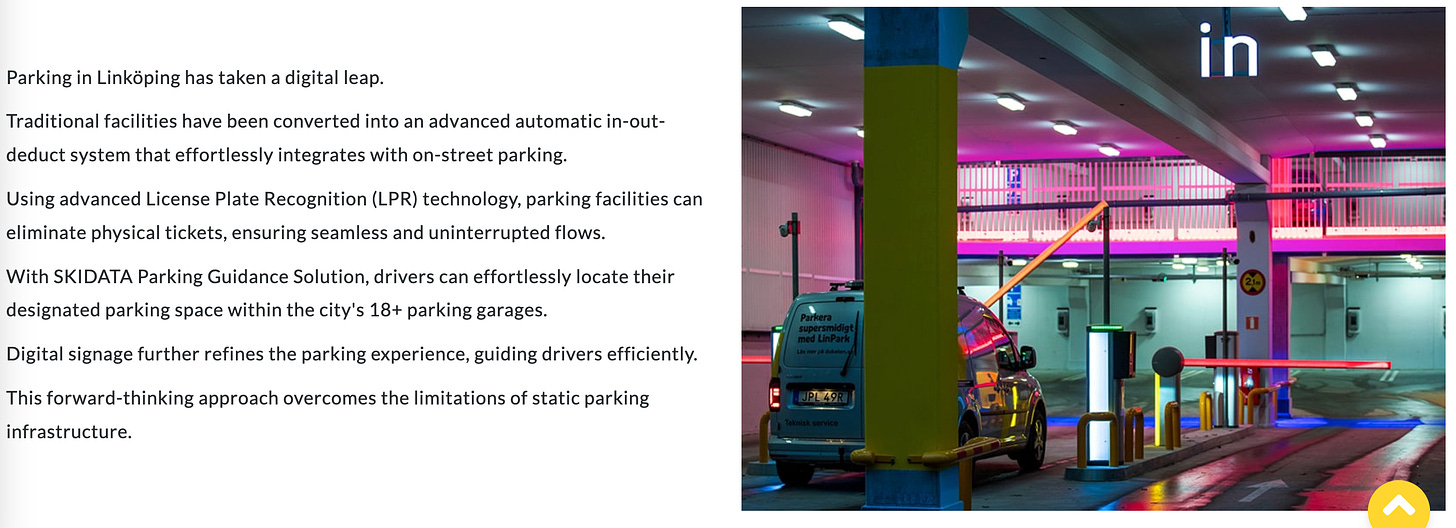

After AI researchers won back-to-back Nobel prizes in Physics and Chemistry, my colleagues were taking bets on how long AI’s winning streak would last, and who would be the lucky winners. ChatGPT was the obvious choice for the Literature prize, but who would win the Peace prize? I guessed it would be Smart Parking, as illustrated above, which, if implemented judiciously, could lead to a measurable reduction in mass shootings. Someone else thought that the future of Peace depends on controlling global consumption of alcohol, but “Research Suggests a Dark Side to AI Use in the Drinks Industry.”

Attention naturally turned to predicting when AI would win its first Fields Medal. The age limit of 40 would never be an issue; chances are the winner wouldn’t make it to the age of 4 without being superseded by an upgrade. For some reason I am tempted to guess that the winner will be named Alexa, but for consistency with previous contributions to this newsletter I will call them Klara 3.0.

Klara 3.0 previously joined the Silicon Reckoner crew in response to a challenge posed by my Paris colleague Jean-Michel Kantor:

**Can a computer come up with the idea of the Poincaré conjecture?**

Kantor proposed this challenge as a thought experiment that he expected to answer the bold-faced question in the negative. My own response to his challenge, to which I return below, did not prejudge the answer. But I’ve since come to believe that Kantor is setting the bar too high. In his talk at the Number Theory Web seminar a few weeks ago, Jordan Ellenberg considered a much more down-to-earth question: can a neural net learn the Möbius function? This function of positive integers, denoted µ(n), is indispensable for analytic number theory. But its definition could hardly be simpler: µ(n) = 1 if n is a product of an even number of distinct prime factors, µ(n) = -1 if n is a product of an odd number of distinct prime factors, and µ(n) = 0 if n is divisible by any perfect square greater than 1.
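The definition translates almost line for line into code. What follows is only a minimal sketch, assuming plain trial division, which is fine for small n and hopeless for the large integers that matter in practice; the name `mobius` is an illustrative choice and is not taken from Ellenberg's talk.

```python
def mobius(n: int) -> int:
    """Return µ(n) for a positive integer n, using trial division."""
    if n < 1:
        raise ValueError("µ(n) is defined only for positive integers")
    result = 1
    p = 2
    while p * p <= n:
        if n % p == 0:
            n //= p
            if n % p == 0:
                return 0  # p squared divides the original n
            result = -result  # one more distinct prime factor
        p += 1
    if n > 1:
        result = -result  # whatever remains is a single prime factor
    return result


print([mobius(n) for n in range(1, 13)])
# → [1, -1, -1, 0, -1, 1, -1, 0, 0, 1, -1, 0]
```

Note that the code has to factor n in order to count its prime factors, so the simplicity of the definition hides the cost of evaluating it.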

You might expect that by exposing a neural net to huge lists of integers n alongside the values of µ(n) it would learn the function, in the sense of correctly identifying the value of µ(m) more often than not for m not on the list, without being given the definition. Ellenberg reported that this indeed happened… but that when multiples of 4 were removed from the trials its success rate was no better than random guessing.

Somehow the neural net learned, without being told, that µ(n) = 0 when n is divisible by 4. And it’s not hard to guess how: whether or not a number is a multiple of 4 can be read off its last two digits, which is especially easy to pick out when it is represented in binary. It did not occur to the machine that the value of µ(n) had some relation to the prime factorization of n. This points to a challenge that seems to me no less pertinent than Kantor’s, but less dependent on the mystique of genius:

Can a computer exposed to tables of the Möbius function recognize, without being told, that its values have something to do with prime factorization?
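To see how far the divisibility-by-4 shortcut goes on its own, here is a rough sketch in Python. It does not reproduce Ellenberg's experiment: there is no neural net, the cutoff `N` is arbitrary, and `mod4_rule` is a hypothetical stand-in that answers 0 on multiples of 4 and otherwise always guesses -1. The only point is that such a rule scores noticeably better on all integers than on the integers that remain once multiples of 4 are removed.

```python
def mobius(n: int) -> int:
    """Return µ(n) for a positive integer n, using trial division."""
    result = 1
    p = 2
    while p * p <= n:
        if n % p == 0:
            n //= p
            if n % p == 0:
                return 0  # a square divides the original n
            result = -result
        p += 1
    if n > 1:
        result = -result
    return result


def mod4_rule(n: int) -> int:
    """Hypothetical shortcut: 0 on multiples of 4, otherwise a fixed guess of -1."""
    return 0 if n % 4 == 0 else -1


def accuracy(predict, numbers) -> float:
    """Fraction of the given integers on which predict(n) equals µ(n)."""
    numbers = list(numbers)
    hits = sum(1 for n in numbers if predict(n) == mobius(n))
    return hits / len(numbers)


N = 5000  # arbitrary cutoff, chosen only to keep the run short
everything = range(1, N + 1)
no_multiples_of_4 = [n for n in everything if n % 4 != 0]

print("all n up to N:         ", accuracy(mod4_rule, everything))
print("multiples of 4 removed:", accuracy(mod4_rule, no_multiples_of_4))
```

The rule never looks at the prime factorization of n, only at its last two binary digits, which is exactly the kind of pattern the challenge above asks a machine to get beyond.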

It is widely assumed that there is no polynomial-time algorithm for prime factorization, which means that training a neural net to identify the Möbius function of a binary integer is almost certainly pointless, probably even with a quantum computer. But I would be impressed by any student, human or mechanical, who upon being presented with a random list of (large) integers n along with µ(n) spontaneously thought to factor n in search of a pattern.

Before I return to the Poincaré conjecture, I remind readers that back in January, I reacted to the hype around AlphaGeometry by proposing the following challenge:

Give AlphaGeometry 2.0 the list of [Euclidean] axioms and let it generate its own constructions for as long as it takes. Then wait to see whether it rediscovers the Euler characteristic.

There may never be an AlphaGeometry 2.0, because DeepMind has since moved on to AlphaProof, which is busy collecting silver medals in the International Mathematical Olympiad, presumably in the hope that it will eventually evolve to the point of winning a Fields Medal, or at least meeting the 1958 challenge by Newell and Simon to “discover and prove an important new mathematical theorem” by 1968.

On the Poincaré conjecture

Back in November and December 2021 I devoted three essays, under the title “What is ‘human-level mathematical reasoning,’” to Kantor’s challenge.

Poincaré’s conjecture, formulated in 1904 and proved by Perelman just under 100 years later, asserts that a simply connected 3-manifold is necessarily homeomorphic to a 3-sphere. Kantor thought, and presumably still thinks, that it is impossible for a computer to arrive independently at the statement of this conjecture. Rather than take a definitive position on the question, I tried to imagine in the third essay — the one that introduces Klara 3.0 — what it would take for a machine — my telephone, for example, or the Smart Meter that measures my consumption of electricity — to surprise me one morning by formulating the Poincaré conjecture for 3-manifolds. Two years later, however, Henry Wilton, a geometric group theorist at Cambridge, pointed out that I relied on an inaccurate history of the Poincaré conjecture and had also introduced some dubious considerations from computational complexity. I corrected the errors in my post of December 2021 and promised to return to Kantor’s challenge. In November 2023 I kept my promise and asked Wilton whether he had any further objections.

Wilton took some time to reply, but he did send me a long message this past summer, and agreed to let me reproduce it here. Before I turn to Wilton’s text, I should remind readers where ChatGPT’s cartoon version of Klara 3.0’s spontaneous formulation of the Poincaré conjecture runs into trouble.

Panel 2: Klara 3.0 starts erasing and rearranging equations, trying to make sense of them. They look determined but puzzled.

In this connection, I wrote:

Something in Klara 3.0’s circuits, like the blinders on a carriage horse, must … predispose them, upon contemplating the chalkboard, to do something, without the intervention of a conscious subject (human or otherwise), that is likely to lead to the generation of the kind of data relevant to the eventual formulation of the Poincaré Conjecture. Panel 2 is a picture of that something, but ChatGPT’s version looks too unstructured. Too many centuries of different kinds of mathematics are crowding that hypothetical chalkboard to be meaningfully rearranged into anything that bears on the geometry of 3-dimensional manifolds, or of any kind of manifold.

Wilton was satisfied with this revision, and if I am reading it correctly his message to that effect indicates that he agrees that the unsolved problem is in Panel 2. But now I can let Wilton speak for himself.

Henry Wilton’s thoughts on Kantor’s challenge

Overall, I’m very happy with your revised attempt. This time, I have no mathematical quibbles! “Wilton apparently believes the question is open” is a decent characterisation of my beliefs.

What’s more, I completely agree with your argument that, mathematics being a human endeavour, A“I”s[1] will need to interact with humans in order to practice it. Indeed, I believe that, left to their own devices, A“I”s will not generate anything that admits any kind of human interpretation at all.

I have some evidence for this belief, viz. an interesting story that I heard from Martin Bridson, who I think heard it from Demis Hassabis, the founder of DeepMind.[2] Here’s the story [as I remember it; any errors are my own].

When DeepMind created AlphaZero, one of the first games they trained it to play was the arcade game Space Invaders. So AlphaZero played a trillion games of Space Invaders, and learned to play the game essentially perfectly, just as it has also done with Chess, Go and many other games.

Next, DeepMind invented a new game as follows: they took Space Invaders, and applied a single random permutation to the pixels. So the new game was formally equivalent to Space Invaders, but looked like snow to a human eye. Amazingly, AlphaZero was already perfect at the new game: it needed no further training in order to play it flawlessly.

It is tempting to believe that, in order to play Space Invaders, an AI must invent for itself many of the concepts that a human invents: the concepts of aliens, and a ship, and laser beams, and blocks. I take this story as telling us that we should not fall into the trap of believing this! Evidently, AlphaZero invented none of those concepts, but rather found a way of playing Space Invaders that cannot be explained to a human. Likewise, I doubt that a mathematical A“I”, left to its own devices, would prove any theorems that a human could make sense of (even if the theorems it discovered were very sophisticated).

None of this invalidates Gamburd’s[3] presumption that A“I”s will inevitably become excellent at the “game” of mathematics. But it does suggest that, if an A“I” ever proves the Riemann Hypothesis (say), we won’t understand the proof.

Incidentally, regarding the question of explosions of computational complexity, I have an interesting test case in mind. My children love to play a card game called “Magic: The Gathering” [MtG]. Remarkably, MtG has been proved to be Turing complete, so formally MtG really is as difficult as mathematics. I wonder whether DeepMind, or some other A“I” company, have any plans to produce an MtG-playing A“I”? This would be a compelling demonstration that there is no computational obstruction to A“I”s doing mathematics.

Finally, I hope you don’t mind if I share my true feelings about A“I”s and mathematics. Unlike Gamburd, I’m not especially worried that A“I”s will become very good at pure mathematics any time soon. Perhaps they will; if so, our jobs will have been automated, and we will have no more right to complain than any of the many other professions that have suffered the same fate.

I am more worried that A“I”s will never become good at mathematics, but will become excellent at *bullshitting* about mathematics. After all, BSing is what large language models are best at. Society as a whole doesn’t care that much about what we do. If Google says that their A“I”s can replace pure mathematicians, I fear that society will believe them, whatever we say about it. And the trend for universities to close their maths departments will accelerate.

I am very pleased to announce that next spring I will be teaching a course entitled

Mathematics and the Humanities: Mathematics and Philosophy

at Columbia, with my colleague Justin Clarke-Doane from the philosophy department. Readers of this newsletter have already encountered Clarke-Doane on more than one occasion (especially here). A very tentative syllabus has been made public on my home page but will certainly be updated more than once between now and mid-January. I expect the course will generate interesting material for this newsletter; but I also hope that readers will enrich the course by providing frequent challenging comments.

[1] [Note from MH:] Wilton writes A“I” rather than AI to indicate his skepticism about the “intelligence” claimed for machines.

[2] [Note from MH:] Now he is Sir Demis Hassabis and, as of this year, thanks to DeepMind’s work on AlphaFold, he is a Nobel Laureate in Chemistry, though he has never been a chemist. And you should be asking: why did Hassabis, and not AlphaFold, receive the Nobel Prize? Nobel’s will stipulates that the prize is to be awarded

to the person who made the most important chemical discovery or improvement;

But that’s the English version; the original Swedish reads

en del den som har gjort den vigtigaste kemiska upptäck eller förbättring;

and although I don’t read Swedish, it seems to me that neither the Swedish word for “person” (which seems to be “person”) nor any of its cognates is present in Nobel’s will. Instead, the phrase appears to translate as

to the one who made the most important chemical discovery or improvement;

That “one” could just as easily be a machine, and no doubt soon will be.

[3] [Note from MH:] This is a reference to one of Alexander Gamburd’s comments on this site:

PS. I find a number of things perpetrated by Facebook atrocious, but your association (however implicit and tenuous) of the Breakthrough Prize recipients with these atrocities I find no less problematic than association of the Nobel Prize recipients with the atrocities committed by using dynamite.

(1) "I am more worried that A“I”s will never become good at mathematics, but will become excellent at *bullshitting* about mathematics. "

(2) "What’s more, I completely agree with your argument that, mathematics being a human endeavour, A“I”s will need to interact with humans in order to practice it. Indeed, I believe that, left to their own devices, A“I”s will not generate anything that admits any kind of human interpretation at all."

I am not worried at all about (1). It is its overall comparative imperviousness to "bullshit", combined with being utterly indispensable as an instrument of comprehension/mastery/power that makes mathematics special in this respect.

Is Harris or Trump a more "democratic" choice to be the next US President? Apparently, at this juncture, the answer to this question is a coin-toss (H or T). There is only one way to find out: US elections -- a quintessentially "human endeavor" (cf. (2)).

But the proof of, say, the Riemann Hypothesis by, say, you or me or by A"I", upon being verified as valid by, say, Aleph-Lean-4, will be accepted as such by you and me -- and all the humans at large -- unequivocally; and, in due course (and I trust before too long), understand the proof humans will.

Nevertheless I remain attached to the view expressed by Hermann Weyl: ‘We stand in mathematics precisely at that point of limitation and freedom which is the essence of man himself.’