News flash: new NSF funding for AI in mathematical reasoning

A neutral-sounding announcement that leaves questions unasked

The program solicitation

On March 6 I received a message from the address nsf-update@govdelivery.nsf.gov inviting me to visit the page headed by the image copied above. There I found the following

Synopsis

The Articial Intelligence, Formal Methods, and Mathematical Reasoning (AIMing) program seeks to support research at the interface of innovative computational and articial intelligence (AI) technologies and new strategies/technologies in mathematical reasoning to automate knowledge discovery. Mathematical reasoning is a central ability of human intelligence that plays an important role in knowledge discovery. In the last decades, both the mathematics and computer science communities have contributed to research in machine-assisted mathematical reasoning, encompassing conjecture, proof, and verication. This has been in the form of both formal methods and interactive theorem provers, as well as using techniques from articial intelligence. Recent technological advances have led to a surge of interest in machine-assisted mathematical reasoning from the mathematical sciences, formal methods, and AI communities. In turn, advances in this eld have potential impact on research in AI.

along with a link to a full 15-page solicitation.

Readers of Silicon Reckoner will find no surprises in the introduction to the new program. Being too lazy to paraphrase I quote verbatim:

…only recently research in this area has demonstrated real impact on the field of mathematics, assisting mathematicians in formulating conjectures, proofs, and counterexamples. Since then, the impact of AI in solving longstanding mathematical conjectures has been felt in fields ranging from representation theory to fluid dynamics. With data being produced at a scale far beyond what any human can study, the potential of AI to identify patterns in enormous amounts of data shows great potential for mathematics.

The second sentence strongly hints that the “real impact” in question refers to the handful of articles published in Nature and the like that were accompanied by splashy press releases and enthusiastic coverage in Quanta or the AAAS’s Eurekalert and the mainstream press, as well as the more sober kind of coverage readers are accustomed to find on Silicon Reckoner. The NSF call continues with a paragraph about formal verification which includes this sentence:

These ITPs, or proof assistant tools, have long demonstrated their impact on software and hardware verification and are beginning to show potential for building mathematical libraries and as verification tools for highly complex mathematics.

No surprises there, except that a month or so ago my Columbia colleague Christos Papadimitriou brought to my attention a 47-year-old paper by De Millo, Lipton, and Perlis on the relevance of formal software verification that remains pertinent and appears seriously to undermine the premise of this paragraph.

The solicitation continues by referring to last June’s workshop, sponsored by the National Academies, on AI to Assist Mathematical Reasoning, which

highlighted the growing potential and timeliness for AI, the formalization of mathematics, and synergies between these fields, to support rapid and eccentric exploration in mathematical discovery and to aid human understanding. It also emphasized that the centrality of reasoning, which modern AI still struggles with, to the mathematical process makes mathematics an especially interesting and important area to test the power of AI, opening the door to the elusive goal of introducing reasoning into AI systems.

Silicon Reckoner devoted no fewer than five long posts to this workshop (starting here) that “highlighted” what was missing from the three days of discussion. I graded sessions on five criteria: Context, Access, Externalities, Democracy, and Cluefulness.1 Sessions with the highest scores were those primarily involving computer scientists, most of whom maintain one foot, or at least a couple of toes, in what is politely called the real world. The mathematicians on the panel, whose concerns apparently lie elsewhere, were insensitive to the first four criteria and almost entirely clueless.

The NSF solicitation contains nothing to help grantees overcome their cluelessness, but this is hardly surprising in a document that emanates2 from the depths of a massive national bureaucracy. Still, it’s jarring to read this anodyne solicitation during a week in which the press has warned about AI’s “runaway thirst for water and energy” and about Silicon Valley’s “pricing academics out of AI research,” not to mention the “extinction-level threat to the human-species” “highlighted” in a report commissioned by the U.S. Department of State.

Money

The program will begin modestly.

We anticipate making 6-10 awards in each competition. Each award size may vary and is expected to range from $500k to $1.2M total for up to 3 years. … Up to $6,000,000 per year is expected for new awards, subject to availability of funds and receipt of meritorious proposals.

Given that the program is run jointly by the NSF’s Directorates for Mathematical Sciences (DMS) and Computer and Information Science and Engineering (CISE), I estimate that it will represent 1-2% of the DMS budget. This doesn’t seem like much, but my experience on an NSF panel convinced me that there are far more worthy proposals in more conventional areas than the number already being funded. Unless new funds are being set aside for the new program3 this means that someone higher up has decided that a few more conventional proposals will be sacrificed in favor of a program with AI in its title. This was not mentioned in the Program Solicitation, and I know at least one regular reader who would undoubtedly welcome such a shift of resources; but this consequence of the new emphasis on AI should be mentioned somewhere, and not only in Silicon Reckoner.

(Realists among readers will immediately object that no one knows whether the NSF will survive in its present form if Trump is reelected in November. Unlike climate science, mathematics will not suffer from a “woke” reputation,4 but one of Columbia’s doctoral students recently warned that researchers who submit proposals including words beginning with the prefixes homo- or trans- may risk being summoned to Congressional hearings. So we may soon hear ourselves talking about MAGAmorphisms or TRUMPitive relations, and we may even be grateful for the opportunity to do so.)

Even the most environmentally and ethically insensitive among us, and those who are convinced the human species has overstayed its welcome, should be alarmed by at least two revelations of last June’s National Academies workshop. The first has to do with the relative scarcity of training materials in mathematics. As I wrote at the time,

During his 15-minute presentation the final day, Alex Kontorovich pointed out that LLM’s like GPT or Bard are trained on 1 trillion lines of data, whereas formalized libraries have fewer than 10 million.

What this means is that, in order to generate enough lines of data to bring mathematical AI to the level of competence of ChatGPT, the research corpus will have to be submerged 100000:1 in the mathematical equivalent of this response by ChatGPT to the prompt “Write a sonnet about non-solvable base change without mentioning either an elephant or Godzilla”:

The Cryptic Dance of Base Change5

In realms of digits, where patterns entwine,

A base change quest, elusive design.

No trumpeting beast, no monstrous sprawl,

Yet in the numbers, enigmas enthrall.

Through mathematical realms, equations spin,

Non-solvable base change, a cryptic kin.

No pachyderm's grace, no giant's stride,

Yet transformations within digits abide.

In silence, whispers of numerals speak,

No elephant trumpets, no titans' peak.

Yet in the ciphered dance of subtle exchange,

Non-solvable mysteries, numbers arrange.

No colossal steps, no mythical roar,

Yet in the base change, secrets explore.

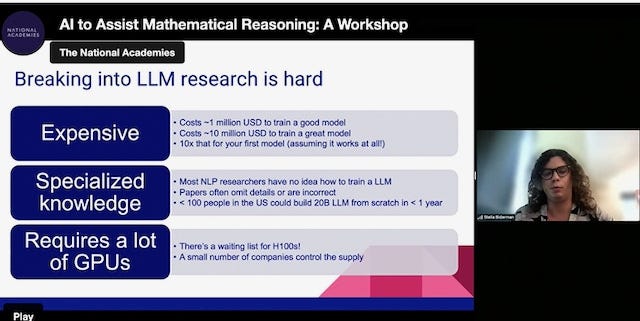

The other revelation came during Stella Biderman’s talk.

It’s not a coincidence that the Nature article to which I alluded above reports on a collaboration with DeepMind, or that Amaury Hayat’s response to my question about resources, at the end of his talk on AI and “the future of mathematics” acknowledged that his work involved training carried out “with Meta’s Evariste AI team.” It’s actually common knowledge, though perhaps not among mathematicians, that an AI “future of mathematics” will be heavily dependent on collaboration with the tech industry. As Biderman pointed out in connection with her slide entitled “concentration,” “the overwhelming majority of [academic] researchers have never trained a model that requires more than 4 GPUs in their life, whereas you need over 100, or 500-1000, to make a good model.”

Collaborating with bad actors like Facebook or Google may not be a problem for the ethically or environmentally insensitive. But the report the State Department commissioned features a “first-of-its-kind, government-wide Action Plan” including “previously unthinkable” recommendations. According to the Time article on the report, these recommendations include “mak[ing] it illegal … to train AI models using more than a certain level of computing power.”

The Time article is quick to acknowledge that congressional implementaion of such recommendations is highly unlikely in the supercharged atmosphere in which NSF decision makers have designed its new program. Still, we can ask what level would be required to develop the kind of mathematical reasoning envisioned by the new NSF program? And how many of us would be ready to contribute to the creation of an extinction-level threat in the pursuit of this objective?

Context: Attention to the deep motivations of governments and corporations.

Access: Access to necessary resources, exclusion, monopoly.

Externalities: Regulation, including climate and water considerations, personal data protection, and copyright infringement.

Democracy: Democratic decision-making, power relations.

Cluefulness: The opposite of cluelessness. Here it consists in acknowledging the lively ongoing conversations about AI in other disciplines.

Possibly with assistance from an in-house or commercial LLM?

I’ve been unable to obtain a precise answer to this question and I suspect that, as always, inclusion of the new program will have an influence, perhaps barely detectable, on the shape and scope of the budget Congress will eventually approve.

Though this may also change from one day to the next.

This was the second of two tries. When asked to “List all the places where you did not mention an elephant in the second sonnet,” ChatGPT replied

In the second sonnet, the references to an elephant were entirely omitted, and the poem does not mention an elephant at any point.

I'm curious as to whether you think the status of your "Central Dogma" has changed in the light of recent developments as is perhaps suggested by this NSF solicitation.

When asserting that "there are far more worthy proposals in more conventional areas than the number already being funded", is this primarily because the Central Dogma (say - an effective, wholesale formalization of mathematics) remains a chimera or is it because, say post-GenAI/Lean etc., such mechanization now seems inevitable but with the claimed downsides/risks? In other words, is this proposal less worthy than those in conventional areas because the Central Dogma is true or because it is false?

The picture of "the differential equation with less than 3 elephants" calls to mind Jim Gleick's lovely phrase in Chaos that one could describe mammals as "non-elephant animals".