Generosity

Is it ever nice to subvert, undermine, or sabotage?

You don’t own web3. The VCs do. It will never escape their incentives. It’s ultimately a centralized entity with a different label. (Jack Dorsey)1

“Sharing is caring. Privacy is theft”

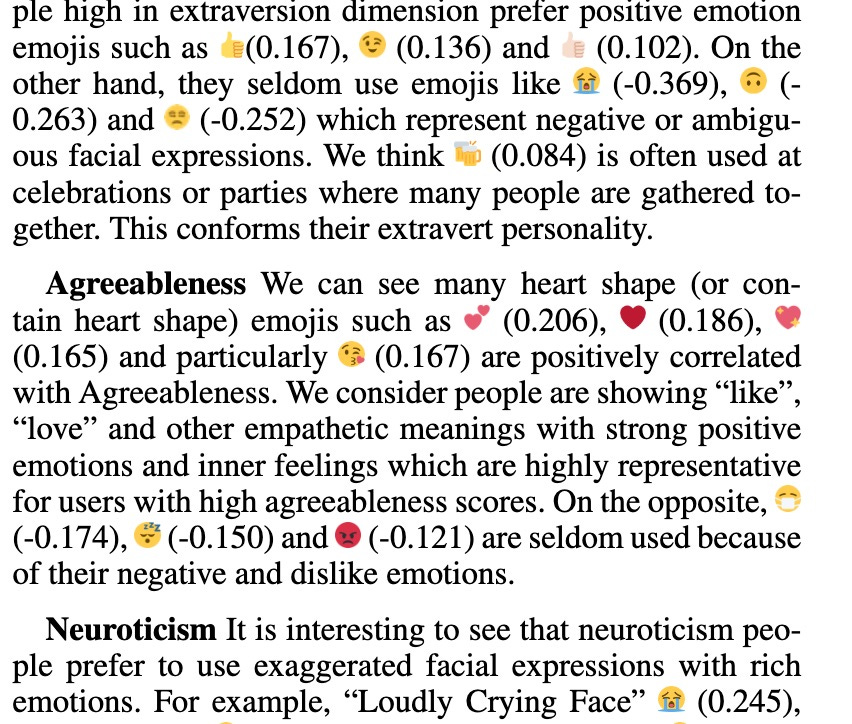

Someone who knows me well has claimed that my failure to use emojis in my e-mails proves that I lack emotional generosity. Quite a lot of published scientific research supports such claims, as witness the above excerpt from an article published in the Proceedings of the Twelfth International AAAI Conference on Web and Social Media.2

In my defense I could claim that my emotions are too complex to be conveyed by aligning a string of shrink-wrapped emotional nuggets. But that defense sounds too defensive; and anyway, can’t a sentence be described in the same way, as a string of words? If forced to reply, I might say that words are ambiguous and don’t keep still long enough to be pinned down by definitions, while emojis come helpfully packaged with explanatory labels. The more emojis you use, the easier it is for a robot to read your messages. AAAI stands for “Association for the Advancement of Artificial Intelligence.” Could it be that researchers wish to advance AI by mining the big data of social media posts as training sets for interaction with a variety of personality types?

Whether or not it is nice to be inscrutable in my daily interactions, I would like to resist the pressure to conform to the model of sociability implicit in the title of this section,3 even if that makes my behavior more difficult for an AGI to process.

People have become slaves of probabilities. Their ideal here, in Alphaville is a technocracy, like that of termites and ants. (Alphaville, Jean-Luc Godard)

Toward the end of the conference on Mathematics with a Human Face (reported here), David Mumford invited the audience to imagine a robot equipped with artificial intelligence, or rather an artificial intelligence capable of interacting with the world and conversing with people. I was sitting on the stage next to Mumford, and someone in the audience asked me how I would deal with such interactions. Without hesitating, I ventured that I would try to convince the robot to commit suicide.

Mumford found my answer surprising. But I was only taking a cue from the undercover agent Lemmy Caution (alias Ivan Johnson) who sabotages Alpha 60, the AGI that rules Alphaville, with the challenge to solve a paradox:

Something that never changes with the night or the day, as long as the past represents the future, towards which it will advance in a straight line, but which, at the end, has closed in on itself into a circle.

Am I the sociopath?

Godard’s precedent inspires my aspiration to foil the emergence of an AGI capable of interpreting my behavior. Does that make me the sociopath, and not the VCs? Do I have a “moral imperative” to spend massive amounts of time on social media, punctuating my texts with heartfelt emojis, in order to make my emotions an open book to be read and remixed by Silicon Valley servers?

But privacy has to be sacrificed for this “unbelievable abundance”:

SAM ALTMAN: … People want very different things out of GPT-4: different styles, different sets of assumptions. We’ll make all that possible, and then also the ability to have it use your own data. The ability to know about you, your email, your calendar, how you like appointments booked, connected to other outside data sources, all of that. Those will be some of the most important areas of improvement.

(from Unconfuse me with Bill Gates, January 11, 2024, my emphasis)

So should I sprinkle ☂️ my writings, including this one1️⃣, with paradoxical 💬 and pointless 🐘 🤾🏽 emojis😱, as a data ⍰ poisoning ℞ tool🧟, to throw 🤽🏿♀️AGI off the track 🛤️, like the artists 🪇 who use programs such as Nightshade to insert invisible 👀 pixels in their work 🛠️ to subvert attempts to use it as training 🚞 data, or the activists who place traffic cones 💈on self-driving cars🚘? And is that kind of sabotage 🧨 even feasible on Substack, a vehicle 🚜 for one of the most notorious of the VCs, Marc Andreessen, who confesses that he “doesn’t even know what my personal views are,” presumably because he has already crossed♱ to the other side 🔄 of the Singularity🦠?

Shouldn’t I instead sabotage the training of artificial mathematicians, in the spirit of a yet-to-be established AUAI (Association for the Undermining of Artificial Intelligence), by saturating cyberspace — starting with my own home page — with flawed, fallacious, deceptive, and just plain ridiculous simulacra of mathematical papers that would somehow be recognized as defective by human readers but that would steer autoformalization programs straight into a death spiral? As a group project, this could restore some playfulness to our profession.

Or perhaps the key steps in our papers could be hidden behind an activation code accessible only upon passing a Turing test?4

Generosity means: use plain English

Comment guidelines

Please keep comments respectful. Use plain English for our global readership and avoid using phrasing that could be misinterpreted as offensive. By commenting, you agree to abide by our community guidelines and these terms and conditions. We encourage you to report inappropriate comments.

(from the Financial Times)

What follows is going to sound petty and petulant as well as inappropriate, and could be misinterpreted as offensive. When sleepy Bonn was its capital, the Federal Republic of Germany chose to make the city its most important center of mathematical research. With the Max-Planck Institute and the Hausdorff Institute of Mathematics, as well as its university, Bonn is one of the premier centers of mathematical research; it regularly hosts dozens of visiting mathematicians from around the world.

Some may be surprised to learn that the city is also home to numerous Germans, who speak the national language when among themselves, but instantly and effortlessly switch to “plain English” when approached by a foreigner. When I asked a German colleague why they didn’t continue speaking German, the answer was that it would be considered ungenerous.

Paris is a much bigger city than Bonn and a much bigger mathematical center, probably home to the world’s largest concentration of mathematicians, though perhaps not for much longer. At any given time (except during the month of August) Paris hosts hundreds of visiting mathematicians, as well as numerous French mathematicians, who not only speak the national language among themselves but continue to do so, even at lunch in the presence of a hapless guest who doesn’t speak a word of French (except maybe “bonjour” and “merci”). This is the case although, like the Germans, French mathematicians are perfectly capable of speaking “plain English.”

Q: Are the German or the French mathematicians more generous?

When I first started taking advantage of one of the perks of being a mathematician and visiting Europe for the round of conferences, I spoke French and German equally well, or equally badly. Ten years later, I could hold my own in a French conversation, whereas I had already forgotten most of what I knew of German grammar. So from my perspective, the Germans were doing me no favors by speaking to me in English. But as I said, placing my interest over that of the community as a whole is both petty and petulant and proves yet again that I lack emotional generosity.

Q, however, was a trick question. If during the first years of my career my French progressed and my German stagnated or deteriorated, it was because my French colleagues, belying the national reputation for arrogance, showed extraordinary patience with my efforts to speak the language, a quality manifested on only one occasion by their German counterparts. So does Q have only one answer?

You will be generous: the choice will be made for you

In a submission to a UK government committee earlier this year, lawyers for OpenAI contended that “legally, copyright law does not forbid training.” OpenAI also stated in that submission that, without access to copyrighted works, its tools would cease to function. (Guardian, April 9, 2024)

I’m finally getting to the real topic of this week’s post: what must we hand over to the tech industry in order not to be accused of being ungenerous? In Boston Mumford argued that an AI needed to be an interactive robot in order to be competent in mathematics:

What do you expect from a program for which the “real world” is meaningless? It has no idea why math or even logic is significant.

The answer is simple: endow the program with senses and motors, train it to talk and move and let it wander the halls and chat with real people (connected electronically with a huge server of course). (from the slides of Mumford’s talk at Boston University in April)

To judge by the talks at last month’s sequel to the 2023 NAS workshop on Artificial Intelligence to Assist Mathematical Reasoning, the industry has a different plan. Albert Jiang’s AI chatbots are “incredibly interactive” but in no state to “wander the halls”; Christian Szegedy devoted his talk to formalization and the problem we have already seen of generating a formalized corpus of the size needed for effective training of AI models. I was tempted to ask whether he still planned to use the pixels of scanned mathematical texts as training data, but I chose instead to ask a copyright infringement question:

Do you believe published papers and articles on the arXiv are available as training data, including articles published under copyright that specify “no commercial use”?

Petros Koumoutsakos, the moderator, admitted that he could “not exactly parse” my question.5 He then guessed, nonsensically, that I was asking what was the “commercial value” of using things from the arXiv. Szegedy, however, understood the question perfectly, and replied as I expected: “even lawyers have differing opinions… that’s not my expertise.”

It’s telling that Szegedy refrained from arguing that the contents of mathematical papers consists of ideas, or knowledge, or something that would be generally recognized as belonging to the universal heritage of humanity, like the decimal system or the Pythagorean theorem. I have heard such arguments, even from mathematicians, with the implication that withholding the material from those best placed to make use of them “for the benefit of humanity,” allowing “their tools [to] cease to function,” would be ungenerous in the extreme. And it’s no secret who has those tools, as Signal’s president Meredith Whittaker reminds us:

Right now, there are only a handful of companies with the resources needed to create these large-scale AI models and deploy them at scale.6

Nevertheless, and despite what OpenAI lawyers claimed above, I have learned that some tech companies are concerned enough to begin offering compensation to some publishers in exchange for the license to stew and liquefy all their “mathy data.”7 The offer comes attached to strings that are so unbreakable that, even if I knew the details, the non-disclosure agreements guarantee that I wouldn’t be able to share them with you. A moment’s thought makes it clear that the point of such an offer, combined with the NDA, is to ensure that any payment for raiding the mathematical commons will be made on terms agreeable to the Magnificent Seven8 — reinforced by the awareness that if these terms are rejected it’s likely that the raid will continue anyway, uncompensated.

Big Brother, it can now be revealed, was an LLM

Haven’t you often wondered how Orwell’s Big Brother could be literally present in those ubiquitous telescreens? Or for that matter, how the God of the Old Testament could generate (often highly poetic) spoken language while keeping track of everyone and everything, to give Jonah 🐳 his marching orders (be more generous with your fellow humans in Nineveh) or speak to Moses from a Burning Bush 🔥 (be more generous with your fellow Hebrews) or call out Cain on his farm 🚜 (you should have been more generous with your brother)?

How much will it cost to generate a digital Big Brother, or Jehovah, or both at once? “[Sam] Altman has suggested,” write two Financial Times reporters, that “it could cost in the order of a trillion dollars to develop AGI, largely due to the infrastructure and data required to train more sophisticated models.”

While the company has generated an estimated $2 billion in revenue in 2023, it has yet to turn a profit.

It reportedly lost $540 million in developing ChatGPT-4, and in December 2022, Altman described the computing costs of running the GPT as “eye-watering.”9

Pretty generous, don’t you think? But the VCs whose “eye-watering” investments keep OpenAI afloat are singularly lacking in generosity: they aim to make rather than lose money. How do they plan to manage that?

If the company does achieve profitability, its deal with Microsoft means the tech firm will first recoup its investment from a 75% share of the profits and will subsequently receive a 49% share up to a profit of $92 billion.

OpenAI, like any platform operating according to the principle of profit, needs a reliable revenue source beyond Microsoft’s share. They could imitate Spotify and water down the product:

Once a tech platform has a critical mass of users, it can start squeezing content creators. Most people can’t afford to work for free, so they quit creating. How, then, does the tech platform continue to grow? Often by sending users to lower-quality content that is free or almost free to produce. For example, songs seemingly created by A.I. are apparently being uploaded to Spotify and recommended to listeners.10

Such will be the destiny of mathematics, and few will mourn, if Silicon Valley artificially inflates the existing corpus by five orders of magnitude (as predicted here). But how would humanity react to a third-rate Big Brother or an off-brand Jehovah?

Quoted in J. Taplin, The End of Reality, p. 177.

I chose this article from the many on offer because the high concentration of emojis adds color to my post.

Quoted from The Circle, by Dave Eggers.

…which, he added, “actually has a lot of votes” (on the Zoom chat).

Whittaker was quoted in an article on nbcnews.com entitled “A growing number of technologists think AI is giving Big Tech ‘inordinate’ power.” Others expressing the same opinion include Tim Berners-Lee, Wikipedia’s Jimmy Wales, and Project Liberty’s Frank McCourt.

And other kinds of published material.

This is presumably why the French online newspaper Mediapart has refrained from cashing Google’s checks for the use of their data:

une condition incontournable pour notre journal n’a pu être arrachée : la transparence sur le contrat signé et ses modalités d’application. Les clauses de confidentialité imposées par Google nous empêchent aujourd’hui de publiciser auprès de nos lectrices et de nos lecteurs non seulement la somme totale versée, mais aussi celle que Mediapart est en droit de percevoir.

Au regard du lien de confiance avec nos abonné·es, qui garantissent la quasi-totalité de nos revenus … il nous est apparu inconcevable d’encaisser le moindre centime, aussi légitime soit-il. … La rétribution ne retourne pour autant pas à l’envoyeur (Google) : elle reste en réserve dans l’organisme de gestion collective, en attendant que le voile sur les chiffres soit levé.

Nicole Willing, "How does OpenAI make money?” techopedia.com, April 11, 2024.