Don't panic!

Chatbot mathematics may drown in its own detritus

Will generative AI ever make a profit?

Gary Marcus has repeatedly argued that it will not — sometimes several times a week — and his September 7 Substack quotes recent news (some of it behind a paywall) that strongly suggests that his predictions were accurate. You don’t have to take his word for it; the headline of this article in L’Express translates as

Generative AI: How the Bubble May Burst

My French brother-in-law, who works in electronic publishing, has been sharing articles like that with me lately. Already last November he had read on forbes.fr that OpenAI spends $2.35 for every dollar of income; more recently he has been pointing out nervous quotes in the French media of the July MIT report that “uncovers a surprising result in that 95% of organizations [that have invested in generative AI] are getting zero return.” Whether or not his information is accurate, it’s the basis for his decision to develop a specialized AI in house. It should soon be online, and uses only 2 GPUs to start.

Should mathematicians be concerned that the industry on which some of us have been counting to bring about our imminent obsolescence is instead on the verge of a new AI winter, with some of the apparently most prominent corporations falling victim to the ensuing wave of Schumpeterian creative destruction? Such concerns seem to me misplaced. Even if investors continue to place their faith in the industry’s leaders to come up with a plan to achieve the promised returns, and even if these returns materialize, I wouldn’t worry that tech corporations will be devoting resources to building systems to “solve mathematics.” The industry just doesn’t care that much about mathematics!

More complex questions are raised by the attitude mathematicians should adopt toward the industrial version of the technology in the meantime. Most trade periodicals have expressed disappointment with the failure of GPT-5 to live up to OpenAI’s promises. This is one of the reasons there’s so much talk[1] about the possible burst of a new tech bubble. Meanwhile, in the background, mathematicians have been quietly playing with new versions of ChatGPT, and some have been sharing their impressions on social media, or on the arXiv;[2] others have reported them to me in private. The verdict, as I’ll report below, is mixed. I’ll return to this at the end of this post, and draw my own conclusions.

The industry will survive

But don’t weep for the industry. Spending on the defense budget — which I guess should now be called the war budget — is not constrained by considerations of cost effectiveness. So I’m not surprised to read, in a broadcast aired by KQED on September 3, that “Silicon Valley is fully embracing contracts and collaborations with the military.”

Each one of these contracts can be worth hundreds of millions of dollars. And once you are working with the US government, they’re pretty faithful as clients. So you’re looking at these contracts that are going to give you amazing revenue year after year.…

The question now isn’t whether the US is going to have autonomous weapons. It’s when will the US have autonomous weapons, and how quickly will companies like Google, or OpenAI, or Microsoft be able to use and pivot their AI technology to create these weapons.

Surveillance will be good for business, too.

Technology can introduce different levels of surveillance that the US government can then choose to use as it wants to, right? And so there’s questions of how much more of a surveillance state does the US become.

The broadcast centered around an interview with Sheera Frenkel, tech reporter with the New York Times, who concludes by asking

anytime a technology is introduced, I think there’s a rush to kind of embrace that new technology. And then often a little like a beat later, like some would say a moment too late, there’s the question of, is this good?

Are the mathematicians working on benchmarks for Google, or OpenAI, or Microsoft, asking this question?

Chatbots as research assistants

The title of this part of the post is suggested by section 4 of this recent article, to which we will turn later, by probabilists Charles-Philippe Diez, Luís da Maia, and Ivan Nourdin,[3] as well as by the speculations from this article by Tim Gowers, one of the first to take seriously the prospect of a future interaction between human and artificial mathematicians. This post was inspired by my learning that Jacob Tsimerman had claimed that his interaction with chatbots had more than doubled his own productivity, and that he anticipated machine displacement of human mathematicians much sooner[4] than by 2099, as Gowers had predicted in the article he published at the turn of the present century.

Well before he started conversing with chatbots, Tsimerman’s contributions to mathematics had earned him an invitation to give a plenary lecture at next summer’s International Congress of Mathematicians in Philadelphia.[5] No one, it seemed to me, needed to be more productive than Tsimerman already was. So I was naturally curious to know how his interaction with high-speed mechanical pattern-matching had changed him. The short version of his answer had four parts:

What it does for me [Tsimerman wrote] is:

1. find references

2. provide proofs of annoying lemmas (correct or at least to the point where I can make it correct)

3. summarize fields I don’t know

4. generate examples at a complexity level that I can’t.

ChatGPT confidently offers solutions to my benchmark problems

The long version came two weeks later, in the form of an hour-long conversation. In the meantime my curiosity got the better of me, especially about item 2, but instead of subcontracting the proof of an annoying lemma I decided to test Tsimerman’s willingness to “bet even money” that AI will be “better than us at math… 2 years out,” and asked GPT-5 to solve my Benchmark Problems 4 and 5.

Benchmark Problem 4 is open-ended: it starts with a problem known to have many solutions—potentially infinitely many—[6] and asks for an optimal one. Without going into details here (perhaps I’ll elaborate in a post specifically directed at professional representation theorists), I can say that GPT-5’s first attempted answer was not only incorrect but failed to address precisely the reason I might have proposed this as an interesting problem; it did, however, provide an argument that the answer, incorrect though it was, might be optimal. After I pointed out the error, GPT-5 gave a plausible recipe that showed its training set contained the relevant literature.[7] However, this time there was no attempt at explaining in what sense the proposed answer might be optimal, and the example it provided repeated the error of the first attempt.

Here to forestall objections I should make it clear that I am well aware that no one is claiming that ChatGPT, even in the version GPT-5, is capable of even moderately advanced mathematics, much less of solving open problems. I chose to work with ChatGPT because (a) Jacob Tsimerman needed nothing more sophisticated to double his productivity and (b) it’s free (in the well-understood sense that its users are the product; see below).

Benchmark Problem 5, unlike the previous problem, asks for a proof of a very precise general result in representation theory, with immediate applications to number theory. I have been thinking about this problem for well over 10 years, and have submitted it as a problem to specialists in analytic, geometric, and combinatorial approaches to representation theory, without any luck. So you can imagine my astonishment when, in less than 10 seconds, GPT-5 produced a complete—and quite elaborate—solution to this problem, one that stretched over several pages and included a number of references to papers that any expert would recognize as relevant to the question.

GPT-5’s solution to problem 5 begins with a surprising claim. Readers will agree that the claim, which I include as a footnote,[8] looks exactly like the kind of proof sketch a professional mathematician might produce. Starting with this claim, I could in fact see how to generate a complete proof myself. Moreover, the observation was sufficiently striking that someone—most likely one of the cited authors—would normally have pointed it out explicitly years ago. So I checked with Dragan Milicic, who not only confirmed that the claim was nonsensical but also provided me with an elegant one-page refutation of the claim that could serve as an introduction—for the benefit of human mathematicians—to the sophisticated theory that he helped to create more than 40 years ago.

Will the last generation of mathematicians be Mechanical Turks?

At this point I can return to the question I raised above: what attitude should mathematicians adopt toward the industrial version of this technology? The situation is tricky. Is it my responsibility to continue the exchange with GPT-5, to point out the error and to continue to point out subsequent errors until it prints an acknowledgment that the problem cannot be solved solely on the basis of the material it has pirated from the internet and the manipulations thereof of which it is capable? Or should I enthusiastically congratulate GPT-5 for its valuable contribution to representation theory, and thus to number theory, in the hope that the training data extracted from our interaction will help “subvert, undermine, or sabotage” Silicon Valley’s plans to “solve” mathematics?

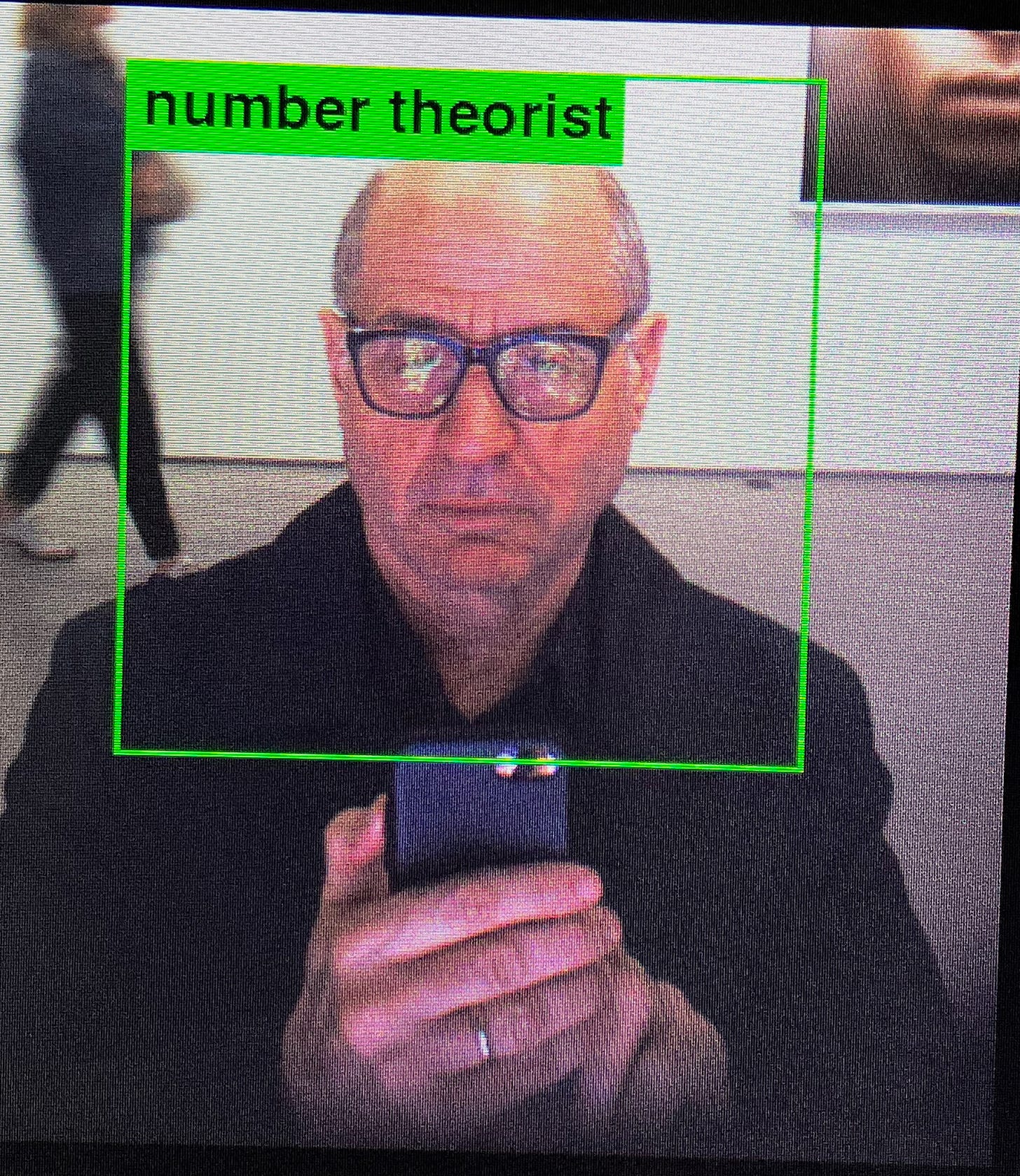

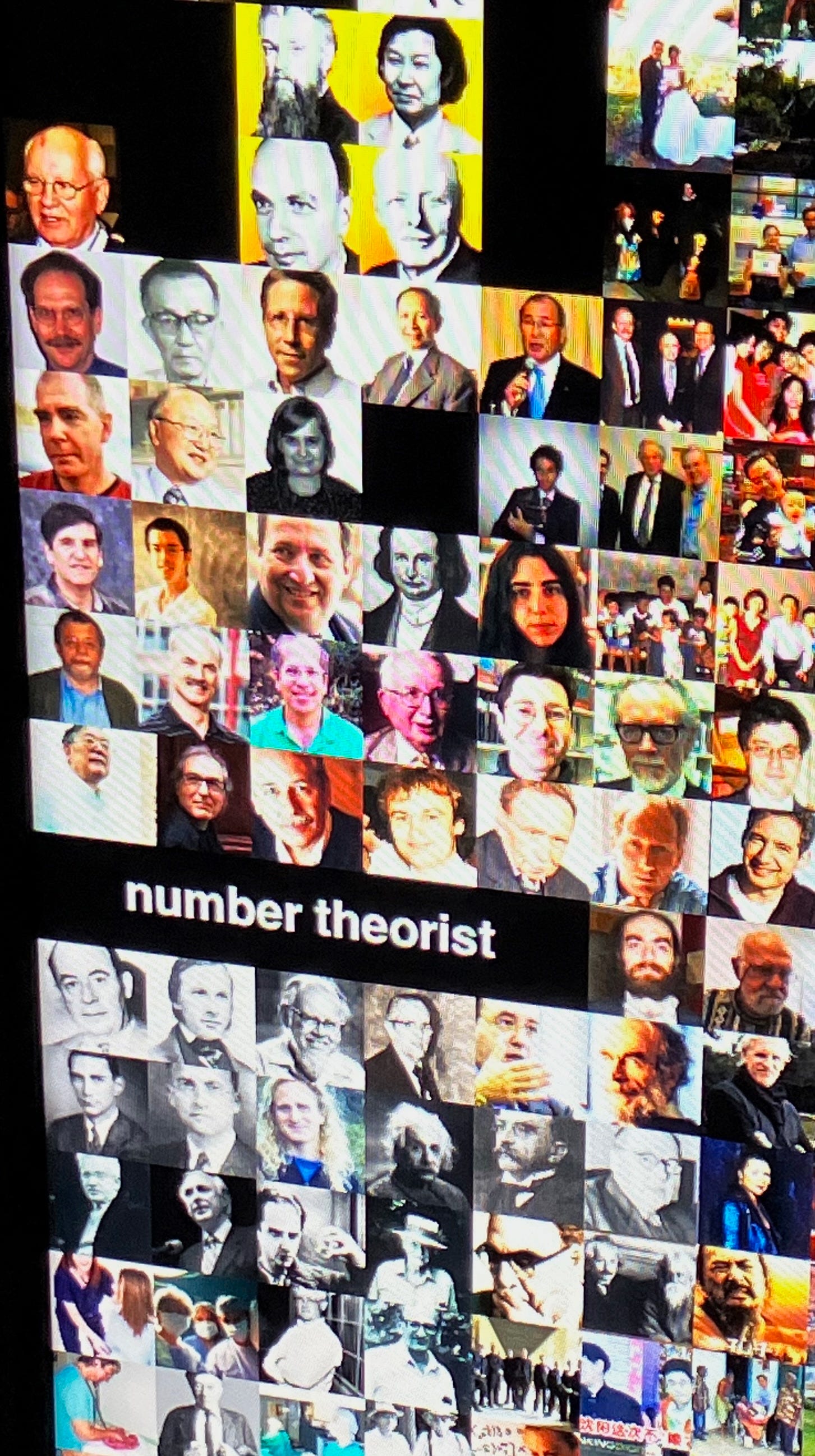

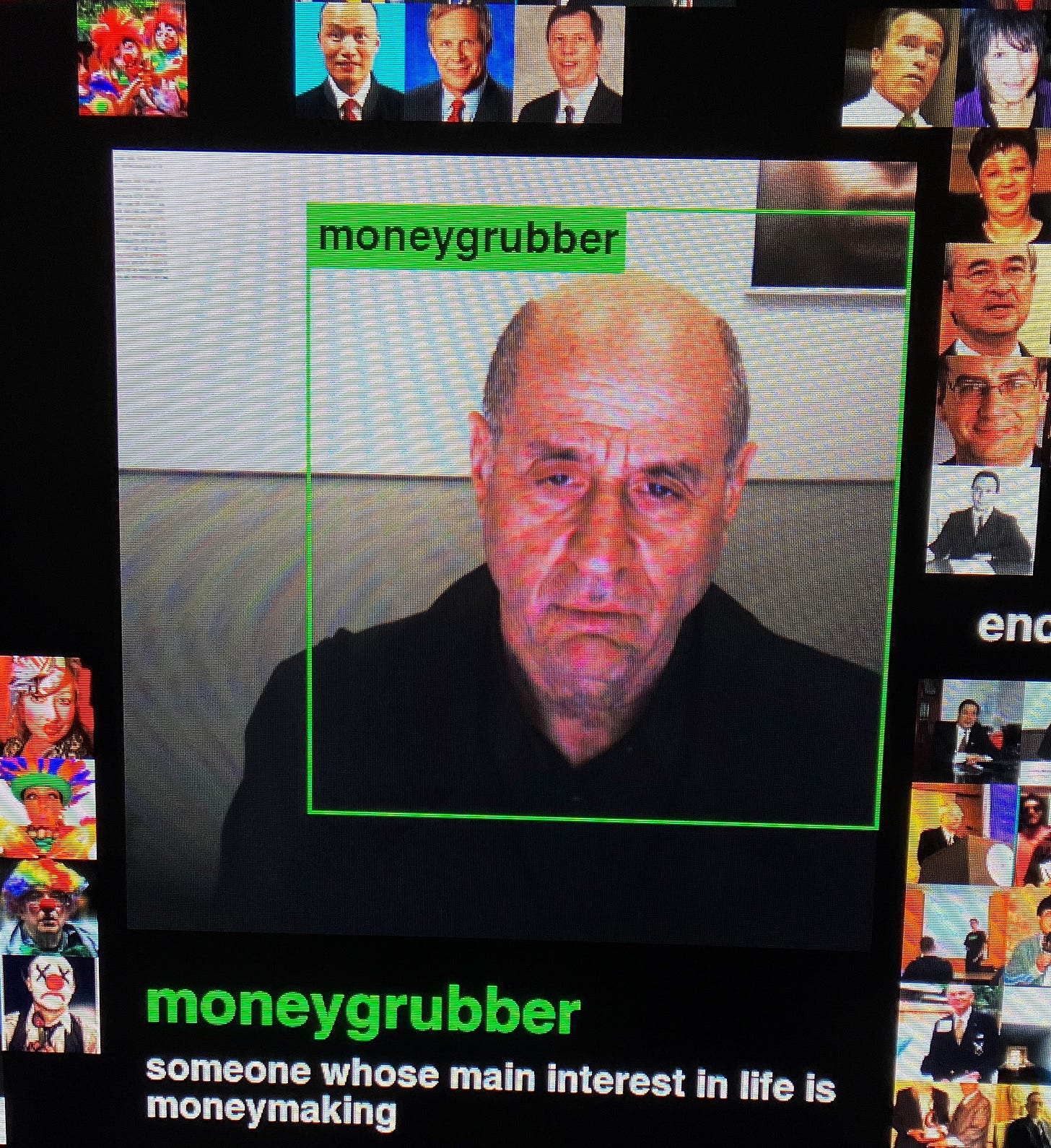

So far I have not reacted at all to the erroneous solution to Benchmark Problem 5, but readers can probably guess that my sympathies are—unequivocally—with the latter option. Some readers may find this attitude highly ungenerous, but I think it is the only legitimate choice—for those of us not prepared to surrender to progressive defeatism—when the alternative the industry offers is to serve as the functional equivalent for mathematical research of the Mechanical Turks[9] who are responsible for the kind of labeling of images like those seen in the photos included with the present post.

Our generosity may not matter

Diez et al. took the other route. GPT-5 returned erroneous arguments in response to their prompts asking for a solution to an open problem in their branch of probability theory, but it was able to correct the errors once they were pointed out. Even so, the authors did not have the impression that this kind of interaction would increase their productivity:

The AI showed a genuine ability to follow guided reasoning, to recognize its mistakes when pointed out, to propose new research directions, and to never take offense. However, this only seems to support incremental research, that is, producing new results that do not require genuinely new ideas but rather the ability to combine ideas coming from different sources. At first glance, this might appear useful for an exploratory phase, helping us save time. In practice, however, it was quite the opposite: we had to carefully verify everything produced by the AI and constantly guide it so that it could correct its mistakes.[10]

Their conclusion is that generative AI carries the risk of “saturat[ing] the scientific landscape with technically correct but only moderately interesting contributions, making it harder for truly original work to stand out.”

The situation is reminiscent of other cultural domains already transformed by mass generative technologies: a flood of technically competent but uninspired outputs that dilutes attention and raises the noise level.

We also foresee a second, more specific negative effect, concerning PhD students. Traditionally, when PhD students begin their dissertation, they are given a problem that is accessible but rich enough to help them become familiar with the tools, develop intuition, and learn to recognize what works and what does not. They typically read several papers, explore how a theory could be adapted, make mistakes, and eventually find their own path. This process, with all its difficulties, is part of what makes them independent researchers. If students rely too heavily on AI systems that can immediately generate technically correct but shallow arguments, they may lose essential opportunities to develop these fundamental skills. The danger is not only a loss of originality, but also a weakening of the very process of becoming a mathematician.

At the January JMM panel on mathematical publishing, representatives speaking for Springer and for arXiv warned that the mathematical “landscape” is at immediate risk, as far as mathematical publishing is concerned, from the massive arrival of AI-generated papers that may or may not be technically correct but that are already saturating the attention of publishers and of those mathematicians willing to take the time to referee them. In this connection the dilemma I outlined above may be moot: whether or not I choose to take my assigned slot as Mechanical Turk and participate in training generative mathematics models by correcting their errors, enough authors are choosing to expand the corpus with synthetic data that its spontaneous heat death cannot be ruled out.[11]

You will not be charged, whatever this image from the Trevor Paglen installation may lead you to believe.

[1] “…the market’s inclination to view news negatively that it might have shrugged off or even interpreted positively just a few months back is perhaps one of the surest signs that we may be close to the bubble popping.” Jeremy Kahn, Eye on AI, September 14, 2025.

[2] See below.

[3] Thanks to Ernie Davis for pointing it out to me.

[4] Tsimerman also recently coauthored a short article whose title promises “A taxonomy of omnicidal futures.” Highly recommended!

[5] Or wherever it ends up being held if the Trump administration’s visa policy doesn’t discourage international attendance.

[6] Heavy reliance on the em-dash is supposedly a telltale sign that a text has been composed by AI. I have vivid memories of growing up in Philadelphia, as a human child, and I believe my overuse of the em-dash began much later, possibly after reading several hundred pages of certain postmodern novelists; but the memories and the belief may have been implanted.

[7] For specialists: the answer involved Blattner’s theorem on K-types of discrete series. The answer was a natural first guess but the system did not show its guess was well defined.

[9] Somewhat better paid, though; this site claims mathematicians were paid $300-$1000 per problem, while Mechanical Turks can be paid as little as $0.01 per assignment. Several items at the exhibition Le monde selon IA at Jeu de Paume specifically aimed to reveal the living and working conditions of the millions of “click-workers” in the global south whose poorly paid work allows the tech industry to promote the illusion that AI is evolving according to its inner logic. I particularly recommend Hito Steyerl’s Mechanical Kurd video, largely filmed in a Kurdish refugee camp on the outskirts of Erbil in Iraq.

[10] This and subsequent quotations are from Diez et al., https://arxiv.org/abs/2509.03065v1.

[11] See also this essay by Trinity College Oxford undergraduate Sahil Grover. I found these links by typing “heat death” and “generative AI,” relying on the time-tested principle that whenever I have an idea, someone else has had it already. Interestingly, Grover was apparently not aware of the paper by Marchi et al. to which I linked above.

[quote] More complex questions are raised by the attitude mathematicians should adopt toward the industrial version of the technology in the meantime. [unquote]

Apropos 'the attitude mathematicians should adopt toward the industrial version of the technology in the meantime', I would venture to suggest that they should urgently seek open-ended individual perspectives on:

(a) How AI can be seamlessly co-opted into their future research since the first-order arithmetic PA is categorical (see [An16], Theorem 7.1):

Provability Theorem for PA: A PA formula [F(x)] is PA-provable if, and only if, [F(x)] is algorithmically computable as always true in N.

(b) How they would economically justify research funding into seeking proofs of theorems whose veridicality for a human intelligence cannot, even in principle, be validated by an AI if no set theory can be a conservative extension of PA (see [An25]).

Kind regards,

Bhupinder Singh Anand

[An16] The truth assignments that differentiate human reasoning from mechanistic reasoning: The evidence-based argument for Lucas’ Gödelian thesis. In Cognitive Systems Research. Volume 40, December 2016, 35-45.

https://www.dropbox.com/s/lrt2xq0wlzo3wxx/s

[An25] The Impending Crisis in Mathematics: The Holy Grail of Mathematics Is Arithmetical Truth, Not Set-Theoretical Proof. Preprint.

https://www.dropbox.com/scl/fi/uk8nscl18s5y034hwin1k/