My benchmark: the full list

It used to be somewhat more obvious that the ability to think was the mark of the human animal, not a tedious backstage task but the entire substance of our tragicomic show.

(Vinson Cunningham, The New Yorker, July 12, 2025)

Time is running out!

Christian Szegedy’s predicted date for the emergence of “super-human mathematician AI” is now less than a year away. Readers will remember that Szegedy had defined a super-human mathematician as one that solves

10% of problems from a preselected 100 open human conjectures … completely autonomously.

I, for one, want to be able to celebrate on that long-awaited day when Szegedy’s prophecy comes true,[1] and therefore consider it urgent to participate in the preselection, before my personal favorites get crushed in the inevitable stampede. Herewith, therefore, I present my list of 10 personally preselected open and unquestionably human conjectures, from areas reflecting my interest at the border[2] between number theory and representation theory, along with a few bonus items.

And I encourage readers to submit their own lists. If only nine of you[3] provide lists of 10 problems each, Szegedy — no longer of Google nor of xAI but now Chief Scientist of Morph Labs and leader of Verified Superintelligence — will be able to use the 100 problems we have contributed to win his bet with François Chollet. And unlike the mathematicians who accepted Epoch AI’s offer of $300-$1000 per accepted problem, I am offering these problems strictly gratis.

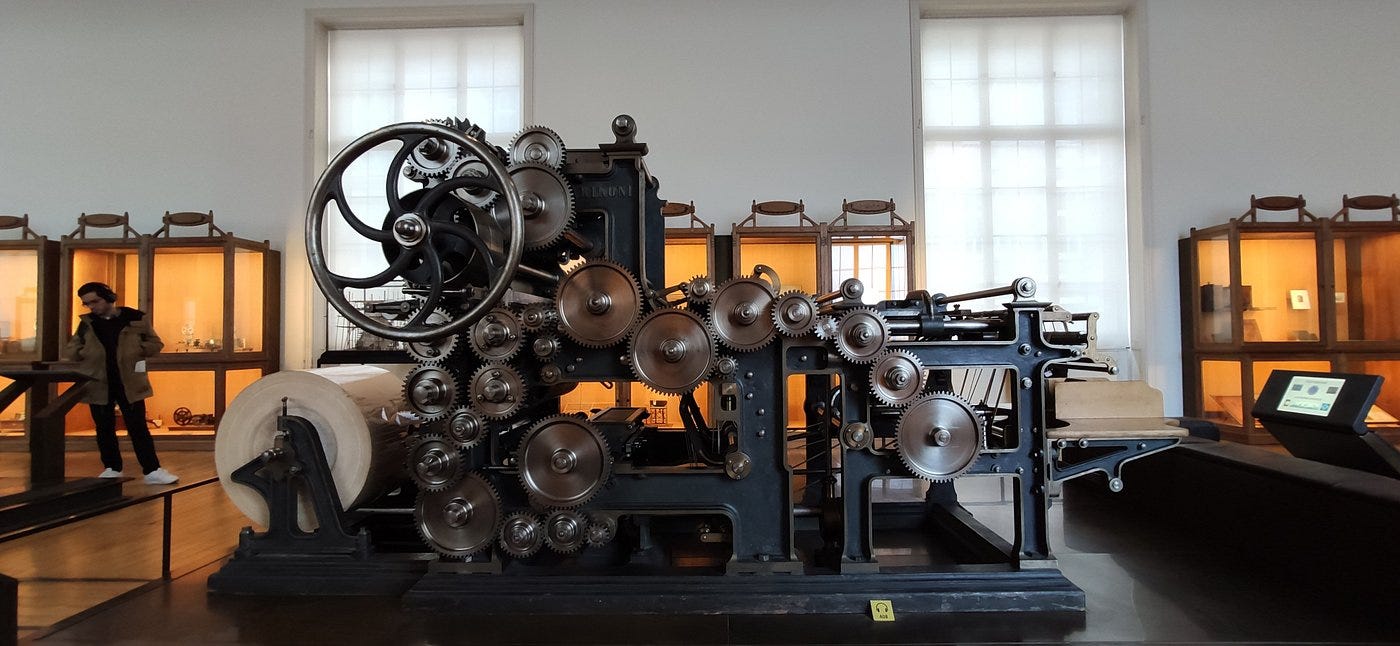

The following list of 10 problems, the solution to any one of which I would sincerely welcome, will unfortunately be incomprehensible to all but a handful of human readers. But don’t waste your time trying to understand them! Even if you are a mathematician — even if you are a specialist in one of the fields represented in this list — in less than a year the Verified Superintelligence will have reassigned you to a cabinet of superannuated curiosities, where you will spend the rest of your career in a display case labeled something like “merely human mathematician,” alongside specimens like this one:

The benchmark problems

They are presented in the order in which they occurred to me. Readers are referred to this earlier post for details on the first two.

1. Express the distribution character of a supercuspidal representation π of GL(n,K), where K is a p-adic field (or, equivalently, the character of the representation JL(π) of the multiplicative group J of a division algebra, associated to π by the Jacquet-Langlands correspondence), in terms of numerical invariants of the n-dimensional representation of the Galois (or Weil) group of K attached to π by the local Langlands correspondence.

2. In problem 1, assume n is a composite number. Find a simple characterization of those irreducible representations R of the group J of problem 1 that are equivalent to representations of the form JL(π) with π a supercuspidal representation of GL(n,K).

3. Find a cohomological construction of Shalika germs of orbital integrals that is valid for all reductive groups over local fields of arbitrary characteristic.

4. Let (G,G') be one of the following two pairs of a Lie group and a closed subgroup: either G = SO(2,n) and G' = SO(2,n-1), or G = U(p,q) and G' = U(p-1,q). Let π and π' be discrete series representations of G and G', respectively, such that

\[ \mathrm{Hom}_{G'}(\pi,\pi') \neq 0. \]

In that case the (known) Gan-Gross-Prasad conjecture asserts that this space of homomorphisms is one-dimensional; let f be any non-zero such homomorphism. Let K and K' be maximal compact subgroups of G and G', respectively, with K' contained in K. Let τ' be the minimal K'-type of π'. Find an optimal K-type τ of π such that f(τ) contains τ'. Here assigning a precise meaning to the word “optimal” is part of the problem.

5. In problem 4, we now assume that G and G' have compatible rational structures over Q, such that K, K', τ, and τ' have compatible rational structures over some finite extension E of Q. To simplify the statement, we also assume that τ is the minimal K-type of π. Let φ and φ' be matrix coefficients of π and π' of type τ and τ', respectively, as determined by Flensted-Jensen’s formula. Prove that the integral

\[ \int_{G'} \phi(g')\,\phi'(g')\,dg' \]

is a rational multiple of a specific power of π (= 3.1415…) when the measure dg' is given by a differential form of top degree that is rational with respect to the given Q-rational structure of G'.

6. Compute the integral in problem 5 exactly.

7. Assuming the invariant twisted trace formula over function fields, prove its stabilization.[4]

8. Extend the formalism of Shimura varieties developed by Jack Sempliner and Richard Taylor to toroidal compactifications and automorphic vector bundles.

9. Prove the Tate Conjecture (every l-adic cohomology class invariant under the Galois group is, after the appropriate Tate twist, the class of an algebraic cycle) for any product of two Shimura varieties attached to an absolutely simple Lie group of type A, each of them of dimension at least 6. The result has to be proved at all levels; a solution for two fixed Shimura varieties that happen to be rational varieties doesn’t count.

10. Prove the Hodge Conjecture.[5]

Approaching the pinnacle of humanity

If difficulty is the goal, why not use unsolved problems?

We did consider that and we are still considering that.… FrontierMath does have a rather separate goal: to get a better understanding of AI's progress towards reaching human level. This requires problems that have actually been solved by humans, for us to have some sense of what the difficulty is.… So the advantages [to using solved problems] are that we do know the answers and we know where the problems stack up on the line from high school math olympian to professional researcher. And we can use this to benchmark how close AI is to the pinnacle of humanity.

(Greg Burnham, interview with Elliot Glazer, published March 11, 2025)

As a bonus I include a question that came up during a conversation last month in Cambridge with Jack Thorne. I don’t know whether it qualifies as a problem. It may be obviously false, or obviously nonsensical. It may be undecidable for trivial reasons. It has certainly not been solved, but after you read it you will not know whether or not it has been formulated.

In order to state the question, I need to review the notion of periods, as defined in the paper of that name by Maxim Kontsevich and Don Zagier. It was published in 2001 in the volume Mathematics Unlimited: 2001 and Beyond, edited by Björn Engquist and Wilfried Schmid, which was meant to take stock of the state of mathematics at the beginning of the new millennium. It is one of those rare papers that provide the experienced mathematician with a satisfying synthesis that in retrospect feels inevitable, but that also can and should be read by every high school student who even temporarily experiences the throes of a genuine and abiding interest in mathematics. For that reason, those who believe they are capturing something meaningful about mathematics with their $300-1000-a-pop benchmark questions are encouraged to meditate at length on the Kontsevich-Zagier paper.

A period is, in the first place, a real or complex number, and while the status of real numbers remains controversial, even among experienced mathematicians — even among number theorists[6] — the ways we have of thinking about them enjoy consensus. And it’s no exaggeration to say that the Kontsevich-Zagier paper introduced a new way of thinking about real (and complex) numbers that, once seen, can no longer be forgotten. Roughly, then, a period in the Kontsevich-Zagier sense is (to paraphrase their definition)

a complex number whose real and imaginary parts are given by the (absolutely convergent) integrals of differential forms whose coefficients are ratios of polynomials with rational coefficients, over domains in (real) Euclidean space whose boundaries are defined by polynomials with rational coefficients.
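To make the definition concrete, here are three standard examples, of the kind Kontsevich and Zagier themselves give near the start of their paper: an algebraic number, π, and a logarithm, each written as an integral of a rational function over a domain cut out by polynomial inequalities with rational coefficients.

\[ \sqrt{2} = \int_{2x^{2} \le 1} dx, \qquad \pi = \iint_{x^{2}+y^{2} \le 1} dx\,dy, \qquad \log 2 = \int_{1}^{2} \frac{dx}{x}. \]

In the first, the domain is the interval |x| ≤ 1/√2, whose length is √2; in the second it is the unit disk, whose area is π.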

Sums and products of periods are again periods, so the periods form a ring, to which Kontsevich and Zagier adjoin the reciprocal of π (= 3.1415…). Because the definition implies that each period is specified by a finite amount of information, the ring of periods is a countable subring of the field C of complex numbers. Since C is uncountable, almost every complex number, as usually understood by mathematicians, cannot be given by a finite amount of information, so periods are very special complex numbers.[7]
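The countability step can be made concrete with a toy listing. Assume, purely for illustration, that every description is a finite string over some fixed finite alphabet (the small alphabets below are hypothetical stand-ins for the symbols needed to write down polynomials, rational functions, and domains). Listing strings by length, and alphabetically within each length, reaches every string after finitely many steps, which is exactly what countability means. The helper `index_of`, a name introduced only for this sketch, computes that step number directly.

```python
from collections.abc import Iterator
from itertools import count, islice, product


def finite_descriptions(alphabet: str) -> Iterator[str]:
    """Yield every finite string over `alphabet`, shortest first.

    Within each length, strings come out in the order of `alphabet`,
    so every string appears after only finitely many others.
    """
    for length in count(0):
        for letters in product(alphabet, repeat=length):
            yield "".join(letters)


def index_of(description: str, alphabet: str) -> int:
    """0-based position of `description` in finite_descriptions(alphabet).

    Assumes the symbols in `alphabet` are distinct.
    """
    k = len(alphabet)
    # Number of strings strictly shorter than `description`.
    shorter = sum(k ** length for length in range(len(description)))
    # Rank among strings of the same length: read it as a base-k numeral.
    rank = 0
    for ch in description:
        rank = rank * k + alphabet.index(ch)
    return shorter + rank


print(list(islice(finite_descriptions("ab"), 7)))
print(index_of("ba", "ab"))
```

The point of `index_of` is that the position of any given description is a finite number computable from the description itself; no comparable listing can exist for all of C.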

Periods thus defined play an important role in some fundamental conjectures in algebraic geometry and number theory. They arise in the statement of the Birch-Swinnerton-Dyer Conjecture, and are implicit in the statement of the Hodge Conjecture, just to cite two of the Millennium Prize Problems mentioned previously. They include many other familiar numbers — not only irrational algebraic numbers but also π and logarithms of integers. The integral in problem 5 above is manifestly a period. But not every number that can be defined by a finite amount of information is a period. For example, Kontsevich and Zagier state on p. 5 of their article that the number e = 2.718… is (conjecturally) not in their ring of periods. But this can be remedied: Kontsevich and Zagier mention that Bloch and Esnault had already introduced a family of exponential periods which includes e as a member, and more recently Javier Fresán has updated their original article with an extended treatment that includes all the complex numbers that arise through natural cohomological constructions.[8]
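For comparison, here is roughly how e enters once exponentials of algebraic functions are allowed in the integrand, which is the basic idea behind exponential periods. This is only an illustration; the precise definition imposes conditions not shown here.

\[ e = \int_{-\infty}^{1} e^{x}\,dx, \qquad \sqrt{\pi} = \int_{-\infty}^{\infty} e^{-x^{2}}\,dx. \]

Both integrals are taken over domains defined by polynomial inequalities with rational coefficients. What changes is the integrand, which is no longer a rational function.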

All of the periods mentioned thus far have finite descriptions, and therefore form a countable set. There are still other interesting numbers, however, that have finite descriptions but that have no obvious connection to cohomology. These include the zeroes of the Riemann zeta function (and other Dirichlet series of arithmetic interest), the eigenvalues of the discrete spectrum of the appropriately normalized Laplacian on a locally symmetric manifold (including the eigenvalues of classical Maass forms), and the Feigenbaum constants in bifurcation theory. Rather than speculate on their relation to the periods of Kontsevich and Zagier, I pose the following

Bonus problem: Is there a finite extension to the Kontsevich-Zagier definition of periods, including the exponential periods, with the property that every complex number that admits a finite description belongs to the corresponding extended ring of periods?
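As a small illustration of what it means for one of these numbers to admit a finite description, the short program below estimates the first Feigenbaum constant δ ≈ 4.6692. It uses a standard numerical method, not anything taken from the sources discussed above. The method locates the superstable parameters a_n of the quadratic family x ↦ a − x², where the critical point 0 lies on an orbit of period 2^n, using Newton's method, and then takes ratios of successive gaps between them. The defaults for `max_n` and `newton_steps`, and the starting guess 3.2 for the ratio, are illustrative choices.

```python
def feigenbaum_delta(max_n: int = 12, newton_steps: int = 10) -> float:
    """Estimate Feigenbaum's delta for the quadratic family x -> a - x**2.

    a_n is the parameter at which the critical point x = 0 lies on a
    superstable orbit of period 2**n; delta is the limit of
    (a_{n-1} - a_{n-2}) / (a_n - a_{n-1}).
    """
    a_prev2, a_prev1 = 0.0, 1.0  # superstable parameters for periods 1 and 2
    delta = 3.2                  # rough starting guess for the ratio
    for n in range(2, max_n + 1):
        # Extrapolate a starting value for a_n, then refine it by Newton's method.
        a = a_prev1 + (a_prev1 - a_prev2) / delta
        for _ in range(newton_steps):
            x, dx_da = 0.0, 0.0  # iterate x_k and its derivative with respect to a
            for _ in range(2 ** n):
                dx_da = 1.0 - 2.0 * x * dx_da  # d/da of (a - x**2), using the old x
                x = a - x * x
            a -= x / dx_da  # Newton step towards the condition "2**n-th iterate of 0 is 0"
        delta = (a_prev1 - a_prev2) / (a - a_prev1)
        a_prev2, a_prev1 = a_prev1, a
    return delta


print(f"{feigenbaum_delta():.6f}")
```

The whole program is a finite description in the sense used above. Running it longer, or in higher-precision arithmetic, yields as many digits of δ as one likes.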

Nurturing informed skepticism [said Mary Lou Maher, a computer scientist and a director of the Computing Research Association] should be a goal.

(“How Do You Teach Computer Science in the A.I. Era?” New York Times, June 30, 2025)

[1] and he wins his bet with François Chollet.

[2] How many such borders will the future conquistadores of artificial mathematics be crossing?

[3] From nine different fields! As Szegedy tweeted elsewhere, his “clear and ambitious definition” of a “super-human” AI mathematician is an “agent that solves prove [sic] 10 previously unsolved, consequential conjectures from different domains” [my emphasis]. Chollet agreed that “we can work with that,” and even Microsoft CEO (and billionaire, if only barely) Satya Nadella chimed in with a comment.

I’m particularly hoping to obtain lists from the following areas: Mathematical logic, analytic number theory, probability, algebraic geometry, algebraic topology, symplectic topology, operator algebras, dynamical systems, partial differential equations.

[4] See my article in the Mitteilungen der Deutschen Mathematiker-Vereinigung or, if you don’t happen to have a subscription, see the original post on this newsletter. I like this problem because the solution will require something on the order of 2000 pages. How many days could all the homes in San Francisco be powered with the amount of electricity consumed in order to solve such a problem? I also like the problem because one of my current projects, with Raphaël Beuzart-Plessis and Jack Thorne, is conditional upon its solution; therefore, if Szegedy’s system doesn’t choose to solve this problem by this time next year I will be motivated to look elsewhere.

[5] This one, which is of course one of the Clay Mathematics Institute’s Millennium Prize Problems, is unlikely to be solved. Its juxtaposition with problem 9, which is similar to problems that have been the subject of highly influential papers, is a reminder that it can be treated as a single conjecture or as infinitely many separate conjectures. To my mind this is a sign that Szegedy’s “clear and ambitious definition” is seriously defective.

But it also raises the question of what to do with the million dollar prize if Szegedy’s system were to prove the Hodge Conjecture. Would it go automatically to Szegedy’s team? $1,000,000 isn’t much by Silicon Valley standards, but would probably make a detectable difference in their short term plans. On the other hand, if the system has to attain consciousness in order to prove the Hodge Conjecture, maybe it would have its own ideas about how to spend the prize money.

[6] See the opening paragraphs of this book review, which report an incident that took place more than 20 years ago; the situation has not changed substantially since then.

[7] You may wonder, in that case, what the point is of having so many real numbers. Perplexity.ai called that “a great question” and offered the following response, which may or may not satisfy you:

The point of having uncountably many real numbers is not that we can describe them all, but that their existence gives mathematics the rich structure and completeness needed for analysis, geometry, and modeling the continuum.

[8] I thank Aravind Asok for pointing out that a draft of Fresán’s book with Peter Jossen, marked as “in preparation” in Fresán’s exposition, is actually available online, if you know where to look.

ICYMI, article about how saying that AI "passes" certain knowledge tests is based on a misunderstanding of what an LLM model is actually doing.

'Potemkin Understanding in Large Language Models

Marina Mancoridis, Bec Weeks, Keyon Vafa, Sendhil Mullainathan

Large language models (LLMs) are regularly evaluated using benchmark datasets. But what justifies making inferences about an LLM's capabilities based on its answers to a curated set of questions? This paper first introduces a formal framework to address this question. The key is to note that the benchmarks used to test LLMs -- such as AP exams -- are also those used to test people. However, this raises an implication: these benchmarks are only valid tests if LLMs misunderstand concepts in ways that mirror human misunderstandings. Otherwise, success on benchmarks only demonstrates potemkin understanding: the illusion of understanding driven by answers irreconcilable with how any human would interpret a concept. We present two procedures for quantifying the existence of potemkins: one using a specially designed benchmark in three domains, the other using a general procedure that provides a lower-bound on their prevalence. We find that potemkins are ubiquitous across models, tasks, and domains. We also find that these failures reflect not just incorrect understanding, but deeper internal incoherence in concept representations.'

https://arxiv.org/abs/2506.21521

To quote Flann O'Brien in The Third Policeman: "what you say must surely be the handiwork of wisdom, for not one word of it do I understand." Your list reads like the purest form of poetry. To know humans have reached this point (these points) is deeply thrilling.