Overhearing (mainly) computer scientists talking to each other, continued

(My) shallow thoughts about deep learning, III: a quick review of the remaining sessions of The Workshop.

This is the continuation of a post by the same name.

The panel discussion

Day 2, The Role of Intuition and Mathematical Practice

was only tangentially related to the themes of this newsletter but was remarkable for the participation of two exceptionally distinguished panelists. Oxford computer scientist Ursula Martin — a Commander of the Order of the British Empire — spent an hour, moderated by Harvard’s Petros Koumoutsakos, opposite cognitive neuroscientist Stanislas Dehaene, professor at the Collège de France, the summit of French academia.

Martin entitled her presentation “How does mathematics happen and what are the lessons for AI+MATH?” I was gratified to see that she began to answer her question by referring to “ethnographers and social scientists who … think about what mathematicians are doing,” going so far as to quote a classic article by M. Barany and D. MacKenzie:

We call attention to the vast labor of decoding, translating, and transmaterializing official texts without which advanced mathematics could not proceed. … There are thus two fundamentally different kinds of mathematical texts. There are papers and reports akin to journal articles in the natural sciences, but there are also tentative, transitory marks that try to produce new orders out of old ones (with a crucial stage of disorder in between). Blackboards, we have suggested, are the iconic site of this second sort of text-making.

Unlike most of the speakers, Martin approached mathematics not as a finished product but as “potential mathematics knowledge, …that vast sea of possible conjectures and theorems”; “accredited” mathematical knowledge (published papers or otherwise); and “all the infrastructures”: people, the "workforce", employers, publishers, funding bodies. Her ongoing joint work with Alison Pease1 and others has looked at collaborative projects like MathOverflow and PolyMath; she asked how “weasel words” like “analogous” or “similarly” make math difficult to read, and she insisted that a paper “needs to speak to some kind of mathematical community.”

As if paying closer attention to what we actually do than most mathematicians were not reason enough to appreciate Martin, here are two more reasons: her energetic scolding of Koumoutsakos for interrupting her; and the title of her last slide:

Martin’s slide shows not only that she is fluent in the pseudo-language of ecosystems and stakeholders2 and that she appreciated (as did I) Jeremy Avigad’s quoting Thoreau at last October’s Fields Medal Symposium. It also reminds us that her talk is addressed to the topic of The Workshop; her message is:

even if AI produces a fire hose of new mathematics we still need ways of thinking about this mathematics.

For this she recognizes that philosophers and social scientists have a handle on “ways of thinking” that have largely been missing from the discussion among mathematicians and (most) computer scientists.

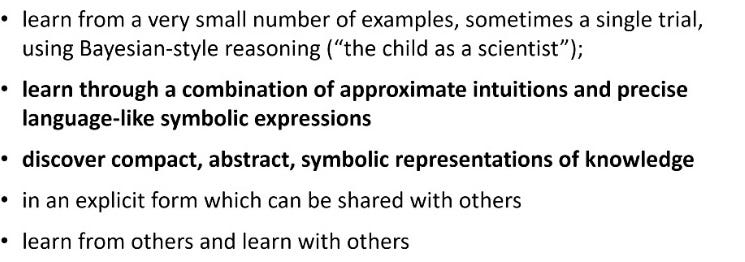

Dehaene asked whether AI can mimic human mathematical intuitions; as a neuroscientist his starting point is to understand the brain architecture that allows humans to do mathematics that other animals cannot. Current artificial neural networks are quite good, he claimed, at the items on this list:

but the brain “keeps the upper hand” (but for how much longer?) in ability to

Dehaene illustrated the state of contemporary thinking on these questions with a number of fascinating bar charts and pictures of illuminated brains, but it was up to moderator Koumoutsakos to ask the panelists to address specific questions from the audience relevant to this newsletter. There were no surprises in either the questions or the answers, however. Rather than try to present them synthetically, therefore, I’m just going to share my raw personal notes. Interested readers can just go back to the videos to clarify obscure points (and to view Dehaene’s illustrations).

Koumoutsakos question: Work on automatically verifying proofs, does anything tell us that AI can prove new theorems?

Dehaene: I'm more interested in the question: can AI discover a new meaningful mathematical fact, a new shape, etc (like platonic solids)?

Martin: Would you count protein folding? Once you start unpicking what is meant by "meaningful" there are examples of finding elaborate combinatorial objects.

Koumoutsakos question: is it possible for AI to come up with something humans can't?

Martin: “If you want it to come up with something that will make mathematicians go wow then you have to prime the mathematical community.”

Koumoutsakos question: Does the math network overlap with other networks that we don't associate with math?

Dehaene: A lot of links between math and space. People in the Amazon already make such links.

Koumoutsakos question: Picasso says good artists copy great artists, so is ChatGPT similar?

Dehaene: I'm impressed by the pace of progress but encoding by vectors isn't quite how meaning is encoded in the human brain (consider the square).

Martin: AI is training us. ChatGPT could write boiler plate text in grant proposals etc. So much of what we read has already been formatted in this way. What are people doing with languages other than English, which have been less "tuned" by AI and algorithms?

Koumoutsakos question: Do you think mathematical intuition can be programmed into a computer?

Martin: I think we'll keep redefining intuition. It's not the sort of thing I like to talk about in general terms. Different people mean different things by intuition. You can get computers to surprise you, but whether you call that intuition isn't clear.

Dehaene: The brain is a sort of information-processing machine. Intuition is information-processing and should be mimicked in a machine one day or another.3 There is a lot of architecture in the brain; will machines have to be close to the human brain to have mathematical intuition?

Koumoutsakos question: So how can we go about discovering the right machine?

Dehaene: I like to start with machines that know very little to begin with (people like Yann LeCun, AlphaGo)?

Koumoutsakos question: Will machines go about mathematics differently than humans as they already have in writing poems or making pictures?

Dehaene: I very much doubt this without some kind of visual representation, some kind of mental buffer for space and shape.

Martin: Such a sea of mathematics already, can imagine more mathematics coming from that; something about John Conway's extraordinary visual ability.

Day 3, Research Advances in the Mathematical Sciences (15 min talks)

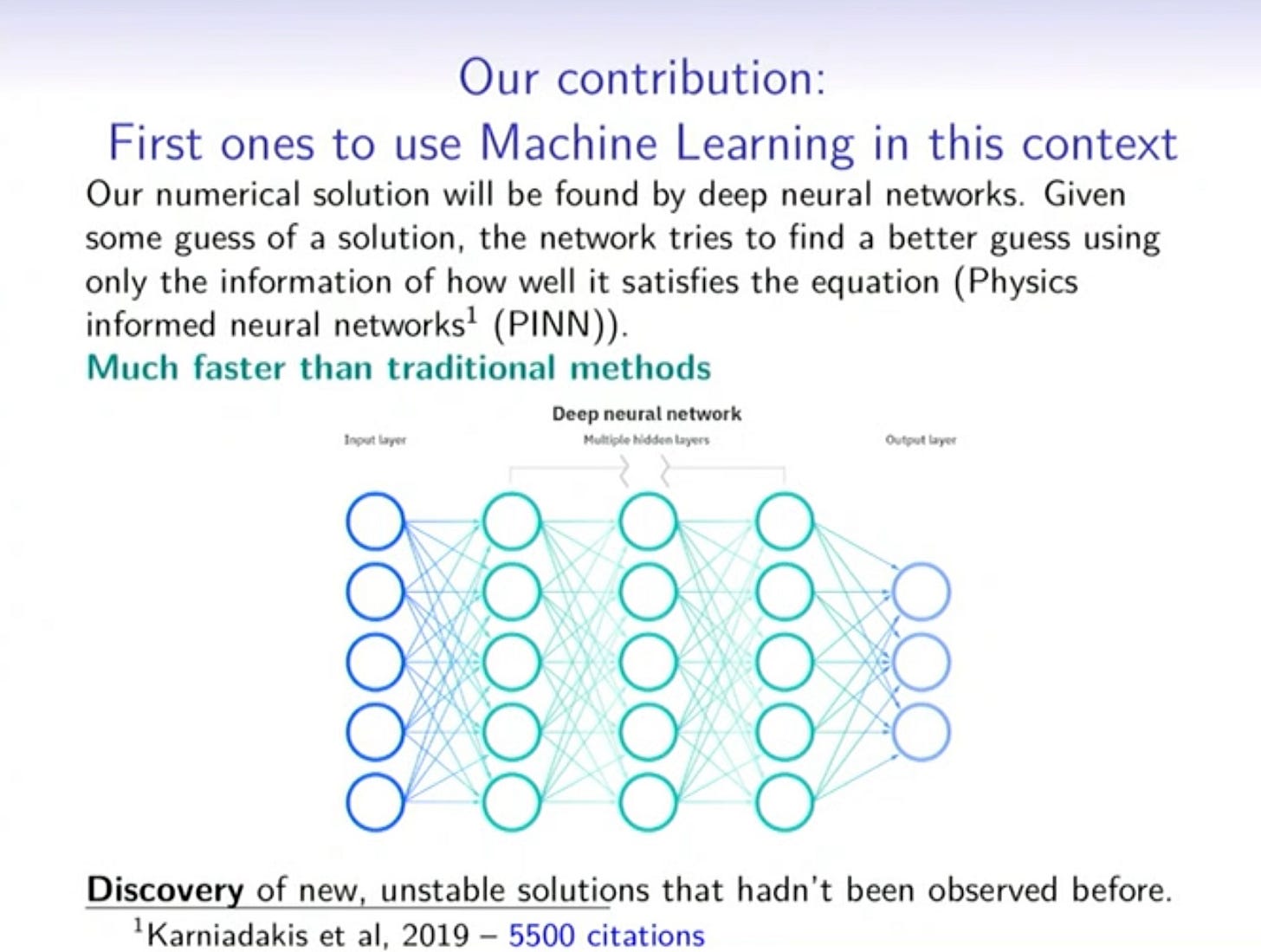

featured 15-minute talks by three mathematicians — Javier Gomez-Serrano, Adam Topaz, and Alex Kontorovich — moderated by Brendan Hassett. Three of the four participants work roughly in my area of mathematics; the exception is Gómez-Serrano, a specialist at Brown in non-linear partial differential equations.

Readers of Quanta Magazine may be familiar with Gómez-Serrano as one of the authors of an article, cited in 2022 by Jordana Cepelewicz, on the use of deep learning to “discover, for the first time, a smooth self-similar blow-up profile for” two fundamental equations in fluid mechanics. Quanta, readers of this site are well aware, has been a consistent booster of mechanization of mathematics in all its variants; nevertheless, a second article by Cepelewicz on computer-assisted proofs in fluid mechanics, features an exchange among experts on

philosophical questions about what a “proof” is, and what it will mean if the only viable way to solve such important questions going forward is with the help of computers…

In that exchange Gómez-Serrano was given the (next-to)4 last word:

when it comes to debates about the extent to which mathematicians should rely on computers, “it’s not that you need to pick a side,” Gómez-Serrano said. “[Hou and Chen’s] proof wouldn’t work without the analysis, and the proof wouldn’t work without the computer assistance. … I think the value is that people can speak the two languages.”

This was also Gómez-Serrano’s message at The Workshop. “It's important,” he said at the outset, “to train mathematicians in ML,” in keeping with his prediction that “it’s going to be useful in many branches of math.”

Going forward, Gómez-Serrano, who mentioned his brief stint working at Google, is “suggesting coexistence of ML with traditional methods.” For strategies like the one in the slide reproduced above it’s hard to disagree. This is the most convincing application I’ve seen of neural networks to mathematics, and I don’t think it raises any “philosophical questions” more significant than those in any computer simulation of differential equations, even though the methods are quite novel. The situation would be different, of course, if the machine provided the initial “guess”; Gómez-Serrano did call for (and predict) “more prominent use of computers as time goes by,” but he didn’t go so far as to anticipate ceding the initiative to the machine.

The presentation contained essentially nothing relevant to the scorecard, however. Only the slide at the end that mentioned “collaborating with a Tech company to obtain more resources” was a silent reminder that independent Access will increasingly be a problem, even for wealthy universities like Brown.

Topaz, an arithmetic geometry at the University of Alberta, was there to talk about his work on formalization; he was one of the chief collaborators on the successful completion of Scholze’s Liquid Tensor Experiment.5 His talk’s slogan:

Formal methods will act as a bridge between AI and mathematics.

Topaz identified several virtues — his “favorite parts” — of work on formalization: it is collaborative, asynchronous, and open. Contributors can focus on partial targets of formalization because the interactive proof assistant ensures consistency.6

After a brief illustration of Lean code, Topaz turned to the question: “How can AI help? Today?” His outline takes the opposite path of the “adversarial process” to which Kontorovich refers in his presentation — rather than inviting a juiced-up Lean-speaking LLM to riff on a question, then attempting to compile its output to eliminate hallucinations, Topaz proposes to ask the AI to attempt to replace occurrences of sorry in the Lean code by pieces of an ultimate proof.

Most of the scorecard’s points for this session were earned in Topaz’s last minute, in which he pointed to barriers: cost, infrastructure maintenance, and a rare acknowledgment (at 2:14:46) of the tension between the open culture of academia “as opposed to the profit-seeking culture of companies like OpenAI.” Nevertheless he insisted that

[W]e should implement AI infrastructure in ITPs right away.

His conclusion was labelled synergy, alluding to his belief that collaboration between AI and mathematics benefits both sides. Topaz sees two points “(among others) [that] make formalization interesting and scientifically worthwhile”:

“We refine and strive to understand the problem solving process itself.”

“We aspire to find the right definitions and abstractions.”

After one talk each on ML and formalization, it was Kontorovich’s turn to explain his “speculative” synthesis. What he calls the Holy Grail is the situation where “AI comes along and helps us solve the Riemann Hypothesis.”

DIGRESSION: I’ve been trying to explain why I really don’t understand why my colleagues continue to collapse human agency in this way, but I haven’t given up. I’ve promised to reply at some point this fall to this comment by AG, which expresses a similar vision; for now I’ll let it pass.

In a second Holy Grail, AI makes its own Definitions and Conjectures7 He admits this is in the mode of Science Fiction and turns to a more modest scenario, where AI assists in theorem proving. To make this possible Kontorovich proposes three Conjectures:

Conjecture 1: LLMs trained on language alone will not reason reliably at a high (professional math) level.

This, he says, is because high level mathematics, unlike language, can’t be captured by big data.8 He notes, however, that this Conjecture runs counter to ongoing research of “people [OpenAI, Google…] … putting a lot more resources than I have.”

Here Kontorovich misses an opportunity to gain points in Externalities by referring to the copyright infringement issue.

We have already encountered

Conjecture 2: The path to AI assisting in “reasoning” is through an adversarial process, likely involving Interactive Theorem Provers.

(the opposite of the process imagined by Topaz) and

Conjecture 3: The current rate-limiting step for AI mathematics is: producing orders of magnitude more lines of (high-quality) formalized mathematics.

(Five orders of magnitude, as I mentioned in the report on Commelin’s talk.)

Here Kontorovich misses another opportunity to gain points in Externalities by referring to energy and water consumption. Instead he observes that producing all those lines typically involves several branches of mathematics and is hard. The Thomas Bloom story is a reason for optimism but he cautions that “humans alone” will not increase the existing stock of formalized mathematics by a factor of 100000.

Only three items in the Q&A moderated by Hassett are really relevant here. In response to the question addressed to Topaz —

How does working in Lean [with its highly cooperative structure] align with incentive structure in mathematics? … How should the mathematical community adapt?

— Topaz said he had no good answer, and that this has to be addressed for formalized mathematics to become mainstream. Kontorovich added that the community should reward the work that young people are doing in this direction, but that the mechanisms don't yet exist. Instead of hoping for projects to come along with the support of people like Scholze, formalizers should “meet the math community where it already exists.” Mathlib is “the most reliable journal on the planet”; there is are complete records of who contributed what to publications there, and this should be rewarded. In particular, mathematicians should be aware that some of the best Lean work is going into computer science journals. Hassett continued the discussion, asking Gómez-Serrano what the community needs to do to make sure this scholarship is recognized; the answer was primarily that math departments have some “homework” to do, specifically to learn what CS conferences are like.

There were two questions on geometric visualization and intuition: one stressed its importance in mathematics, the other asked whether Euclidean geometry has been completely formalized, to eliminate its reliance on pictures. The apparent consensus among panelists — that proofs by pictures are intrinsically problematic — was more interesting than the specific answers.

Finally, Topaz made a very intriguing comment: “100-line proofs create hardware problems, the system is too slow. Automation can help.” I need to look into this!

Because this last panel was a dialogue among mathematicians, I feel obliged to grade it on the scorecard whose rules were explained in the first post in this series.

Context9 2/10 just because Topaz mentioned the “profit-seeking culture” of the tech industry.

Access10 5/10 of which 3 points for repeated discussions of the “incentive structure” and 2 more for the need to seek resources outside academia.

Externalities11 0/10. I consider this grade generous, given the repeated missed opportunities to say something about this.

Democracy12 2/10 for the incentive structure again.

Cluefulness13 0/10.

Who holds the interestingly named position of Senior Lecturer in Argumentation at the University of Dundee.

On one slide Martin went so far as to use the expression “stakeholder ecosystem.” See ChatGPT’s poem “In Praise of Stakeholders.”

Thus Dehaene reveals himself to be a partisan of the computational theory of mind.

The actual last word was spoken, ominously, by Rafael de la Llave:

…there’s a new game in town.

In case you are confused about the timeline: Scholze posted his challenge on the Xena blog in December 2020 (at which point I wrote an article, which Quanta rejected, correctly anticipating the reaction to its solution). The first announcement of success was posted by Scholze in June 2021; he wrote

While this challenge has not been completed yet, I am excited to announce that the Experiment has verified the entire part of the argument that I was unsure about.

Quanta did publish an article at this point, but ignored the announcement just over a year later, in which Topaz was cited as one of the main contributors, that the formalization had been completed.

Formalization holds no interest for me, but I would welcome an AI assistant — community-owned, of course — to relieve the headache of keeping notation consistent in my collaborations.

Interestingly (for fans of Siobhan Roberts’s biography of John Conway), he views this scenario as analogous to the unexpected discovery of “monstrous moonshine.”

Of course I strongly agree, and I refer readers to Gayatri Spivak’s insight that the digital will never capture the contingent.

Context: Attention to the deep motivations of governments and corporations.

Access: Access to necessary resources, exclusion, monopoly.

Externalities: Regulation, including climate and water considerations, personal data protection, and copyright infringement.

Democracy: Democratic decision-making, power relations.

Cluefulness: The opposite of cluelessness. Here it consists in acknowledging the lively ongoing conversations about AI in other disciplines

It is fascinating to read your opinion on this topic (although I admit to skipping some parts of your posts, as they are sometimes too intellectual for me; of course, I do this at my own peril). It is particularly interesting because I've met many of the actors in your posts. Clearly you feel that the computer will play an important role in the mathematics of the future, otherwise there would be no need for this blog. It took a while for me to understand what you mean by "central dogma", but after trying to explain it to a friend I think I understand now what you mean: The central dogma says that informal mathematics is deemed correct if and only if it corresponds to a valid formalization of it. Now, taken as a theorem, the central dogma might actually be true (and I believe it is). But of course, as a definition of the correctness of informal mathematics, it is not satisfactory, and as you pointed out, what does correspondence mean in the first place? I explained the mutilated chessboard problem to my girl-friend, and she understood why it is impossible to tile the mutilated board with dominoes. Without her knowing anything about mathematics or formal logic. So clearly, this is not a game, and it is real.

I believe I am currently the person in the world who understands the nature of logic best (I might be wrong about that though; my work hasn't been successfully peer-reviewed yet). It can be understood in terms of there being: 1) a mathematical universe; 2) operations on this mathematical universe; and 3) operators, which are just functionals on this mathematical universe, and provide a way for talking about operations. Truth is modelled as a value 𝖳 in the mathematical universe. All the fancy type theories you see in interactive theorem provers out there are just confusing complications of this simple model (saying stuff like this gets my papers rejected). Logic is really just a precise way of writing down your thoughts, and there is nothing mysterious about it, but in doing so, it is like making a deal with the devil: there can be many ways in which the precise contract can be fulfilled, not only the way you might think of.

My goal is to provide people with a software that is a "bicycle for their mathematical mind". Clearly something better than paper must be possible, and I believe formal logic is essential to it; as long as it doesn't feel like something to fight against beyond the intrinsic difficulty of the problem. Paper / LaTeX allows me to write down anything I want to, and logic must do the same. The advantage of software-enabled logic over paper is that logic actually "understands" what I am writing down, in the same way the devil would.

I feel you would actually be in favour of such a software that supports your thinking instead of pressing it onto preformed rails, although your blog seems to be directed against ITP (I think you claim neutrality or indifference somewhere, but if that is so, why write this blog). Am I wrong?

Regarding the digression on "collapsing the human agency":

Insofar as the eventual arrival of meta-maticians is concerned (and I was careful in going no further than "submitting a competitive paper", which is a natural next step after "working through all of the exercises in the Springer-Verlag Graduate Texts in Mathematics series"; "competitive doctoral thesis" is another way of putting it), my view is that "For us, there is only the trying. The rest is not our business." Where we (human mathematicians) do have agency is in making sense of the imminent encounter and reacting accordingly.