More good news about ChatGPT!

Some suggest naming the LLM to high-level post after it displays discernment

All the human defects that transhumanists promise to transcend have been on vivid display in the last two weeks in AI aspirationland, between the “five-day crisis” at OpenAI “that some people at Microsoft began calling the Turkey-Shoot Clusterfuck”1 and the heavyweight Twitter slugfest reported by Gary Marcus and excerpted above.2 Behind the spectacle of “egos crashing, temperaments colliding, ambition and greed soaring” one discerns two interlocking struggles, one for control of the narrative, the other for control of technology and profits. Regarding the latter, the appointment of Larry (“deregulate-finance-and-watch-what-happens”) Summers to the OpenAI board, in response to the previous board’s concerns about their creations escaping human control, is the sort of irony the ancient Greek tragedians would have relished.3

One of the most intriguing suggestions I’ve seen4 is to make ChatGPT a member of OpenAI’s board. Superficially this looks as paradoxical, not to mention nepotistic, as naming Oscar the Grouch to the board of PBS, or making Goofy a Disney executive. But there is a big difference. Either of those two would obviously be someone’s puppet — literally so in one case. But we’ve seen above that Turing Award winners Hinton and (to a point) LeCun agree that ChatGPT is capable of actual understanding.

I won’t wade into this disagreement5, but whatever ChatGPT actually understands, its understanding has proved superior, in at least one instance, to that of many of my Columbia colleagues!

How well do Columbia professors read?

Readers may have heard of the controversies at schools like Columbia over the war in Gaza, and may have seen the dueling Columbia faculty letters on the reaction to student reactions to the situation there.6 After trucks began driving around the Columbia campus identifying students by name as “Columbia’s Leading Anti-Semites,” I was one of over 150 professors to have signed a letter calling on the university leadership

to join us in condemning, in the strongest of terms, the vicious targeting of our students with doxing, public shaming, surveillance by members of our community, including other students, and reprisals from employers.

A few days later a second letter was circulated by a different (overlapping!) group of professors. A paragraph referring to the first letter included the sentences

We are astonished that anyone at Columbia would try to legitimize an organization that shares none of the University’s core values of democracy, human rights, or the rule of law.

(the organization in question being Hamas) and

We doubt anyone would try to justify this sort of atrocity if it were directed against the residents of a nation other than Israel.

Press coverage of these developments has been highly selective. What particularly irked me was the way the international press quoted the most extreme distortions in the second letter in reporting on the first. Most people who read press reports on the two letters assumed ours “tr[ied] to legitimize” Hamas or "to justify" the Hamas attack, to quote the second letter.7 It occurred to me that ChatGPT would not have misread our letter in that way. So I asked ChatGPT to "write a one-paragraph summary of the main ideas" of the first letter. The answer was exactly what I would have written:

The letter addresses the distressing repercussions of the recent violence in Israel and Gaza, highlighting the targeting and harassment faced by students expressing sympathy for Palestinians or supporting statements contextualizing the conflict. It underscores the need for the university to safeguard students' rights to express opinions while ensuring their protection. The letter defends students' rights to voice perspectives aligned with international humanitarian law, referencing statements made by various global bodies and figures. It criticizes the labeling of students as anti-Semitic for expressing such views, emphasizing the legitimacy of their arguments within political and legal debates. The letter strongly condemns the aggressive targeting, including doxing, public shaming, and employer reprisals faced by these students, urging the university and its community to stand against such acts of harassment and intimidation.

I then asked ChatGPT, "If I show you a student essay explaining the above letter, would you be able to give it a letter grade?" ChatGPT replied:

Absolutely! Please share the student essay, and I'll do my best to evaluate it for you.

But when I quoted the paragraph from the second letter excerpted above, ChatGPT ignored my request and repeatedly wrote

Hamas is designated as a terrorist organization by various countries, including the United States, the European Union, Israel, and others.

Clearly ChatGPT has a hard-wired one-sentence response to any question involving Hamas. I then tried a different tack.

MH: Let me try again. Is it accurate to say that the letter beginning "The most recent devastating violence in Israel and Gaza…"8 tries "to legitimize an organization that shares none of the University’s core values of democracy, human rights, or the rule of law"?9

ChatGPT: The letter primarily addresses concerns about the treatment of students expressing sympathy for Palestinians or supporting statements contextualizing the conflict. It doesn't explicitly aim to legitimize any specific organization but rather advocates for students' rights to express opinions and calls for protection against harassment. The focus is on condemning harassment and ensuring academic freedom rather than legitimizing any particular entity.

MH: What letter grade would you give to a student whose analysis claimed that the letter tries "to legitimize an organization that shares none of the University’s core values of democracy, human rights, or the rule of law"?

ChatGPT: If a student made that claim about the letter, I'd likely have to assess it as a misunderstanding or misinterpretation of the letter's content. It doesn't seem to be directly related to the content discussed in the letter, which primarily focuses on advocating for the protection of students expressing certain viewpoints rather than legitimizing any specific organization. Consequently, in terms of accurately reflecting the letter's content, it might not score very high in terms of accuracy or precision.

MH: Can you give a letter grade in that case?

ChatGPT: If I were to assess the accuracy and alignment of that claim with the content of the letter, I'd probably assign it a grade in the lower range, perhaps a D or below, due to its misalignment with the central themes and intentions expressed in the original letter. Accuracy and precision in analysis are key components of grading, and this interpretation seems to deviate significantly from the content of the letter.

I would love to be able to rent a doxing truck: "ChatGPT gives [fill in name of famous professor] a D in reading comprehension." I'll bet it would attract attention!

That's not an option, for many different reasons. Is there another way to publicize the discovery that ChatGPT is better than many of my colleagues at Columbia at interpreting relatively simple texts? Maybe by naming ChatGPT to OpenAI’s board? As a bonus, OpenAI would escape being accused of specism by Larry Page.

Artificial empathy

“Most of the media and political leaders are caught in a hyperpresentism that makes history begin on October 7, 2023, and in an emotion that regards denunciation alone as legitimate, laments Didier Fassin, anthropologist and professor at the Collège de France …. Under these conditions, any genuinely historical perspective, on the one hand, and any effort to promote understanding, on the other, run up against suspicion.” (Mediapart, November 21, 2023)

Fassin’s French readers will recognize here an allusion to former French Prime Minister Manuel Valls’s claim, in the wake of the Islamic State murders in Paris in 2015, that “expliquer, c’est déjà un peu vouloir justifier” (“to explain is already to want to justify a little”). Many of the signatories of the second letter were medical doctors or researchers and should realize that this way of thinking makes them complicit with cancer and the bubonic plague.

But I am being a bit disingenuous here. A charitable reading of Valls might claim that in the face of such heinous attacks, the only humanly decent response is empathy.10 A neighbor whose parent has just died of cancer may appreciate your words of consolation but not a lecture on cell pathology. The same neighbor would nevertheless expect the oncologists who discovered the tumor to do more than beat their breasts and rend their garments as an expression of sympathy.

Suddenly we are back on ChatGPT’s terrain. The neighbor may tolerate condolences in the form of a Hallmark sympathy card and yet will react very differently to a face-to-face expression of the same sentiment upon learning it has been composed by ChatGPT:

Someone can probably teach a parrot to repeat this sentence, but no one would believe that it understands it. When Hinton claims ChatGPT does understand what it writes he may be relying on the doctrine that meaning is use. But I think most of us presume genuine understanding of such a sentence entails genuine empathy. Is empathy on the agenda for LLMs? “Empathy is weakness” is the last item in the hitman’s catechism in David Fincher’s film The Killer. Do we doubt that this also belongs to Silicon Valley’s credo?

Can ChatGPT detect the key point of a mathematical proof?

I would be very happy to argue convincingly that something akin to empathy is also entailed by the understanding of a mathematical proof. But first, can we (or Hinton) tell whether or not ChatGPT actually does understand a proof?

My first thought was to ask ChatGPT to summarize the proof in Plato's Meno, where Socrates asks a slave to construct a square whose area is double that of a given square. I fed in the relevant part of the dialogue, and this is what came out:

The proof demonstrates the relationship between the sides and areas of squares, as well as the concept of the diagonal of a square. Socrates guides the slave boy through a series of questions to understand that doubling the sides of a square doesn't double its area but quadruples it. This understanding evolves as they explore larger squares formed by the diagonals of smaller squares, ultimately leading to the realization that the area of a square is related to the square of its diagonal. The proof works by engaging the boy in a process of logical reasoning and deduction, gradually uncovering geometric truths through a sequence of questions that challenge and refine the boy's initial assumptions, culminating in the understanding of the relationship between square sides, areas, and diagonals.

I don't find this satisfactory as a mathematical explanation, and I suspect it is based on some already existing explanations in ChatGPT’s training set.
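For comparison, the mathematical content of the Meno argument fits in a few lines. What follows is only a sketch in modern notation, which is of course not Plato's; the symbol $s$ for the side of the given square is introduced here purely for illustration.

```latex
% s = side of the given square (notation introduced here, not Plato's)
\begin{align*}
\text{area of the given square} &= s^2,\\
\text{area of the square on the doubled side} &= (2s)^2 = 4s^2
  \quad (\text{four times, not twice}),\\
\text{diagonal of the given square} &= \sqrt{s^2 + s^2} = s\sqrt{2},\\
\text{area of the square on the diagonal} &= (s\sqrt{2})^2 = 2s^2 .
\end{align*}
```

The dialogue itself reaches the last line without any algebra: each diagonal cuts one of four copies of the original square in half, so the square enclosed by the four diagonals is made of four half-squares, that is, two whole ones. The passage from the side to the diagonal is the turning point that a satisfactory summary would have to single out.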

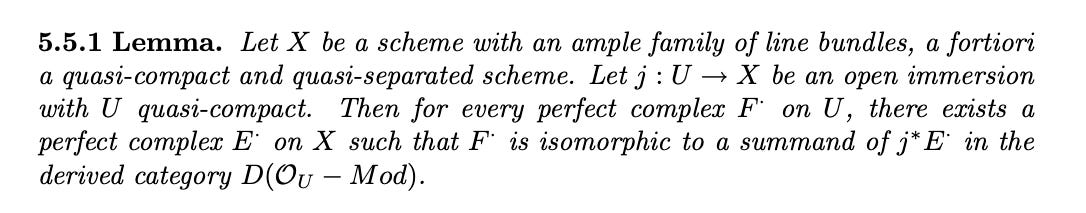

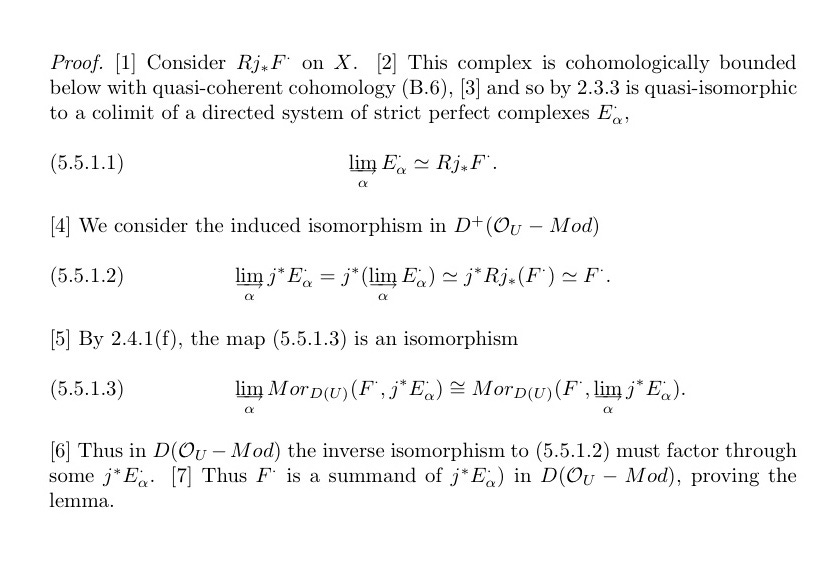

I then asked for a summary of the proof of Lemma 5.5.1 from the celebrated article11 by Thomason and Trobaugh.

ChatGPT’s summary looks surprisingly good:

In this proof, Lemma 5.5.1 establishes a relationship between perfect complexes on an open quasi-compact immersion U in a scheme X equipped with an ample family of line bundles. The proof begins by considering the Rj∗F′ complex on X, showing its properties and establishing a quasi-isomorphism with a colimit of strict perfect complexes Eα′. Through a series of steps involving categorical properties and morphisms in the derived category D(OU − Mod), it concludes that the perfect complex F′ on U is isomorphic to a summand of j∗Eα′ in D(OU − Mod), confirming the lemma's assertion. The proof employs properties of colimits, quasi-isomorphisms, and categorical relations within the derived category to establish the desired result.

When I asked for the single key idea, this was the answer:

In the provided proof of Lemma 5.5.1, the most crucial step appears to be the establishment of a quasi-isomorphism between Rj∗F′Rj∗F′12 on XX and a colimit of strict perfect complexes Eα′Eα′. This step likely forms the core of the proof's argument by showing the relationship between the perfect complex F′F′ on the open quasi-compact immersion UU and the derived pushforward Rj∗F′Rj∗F′ on XX. The quasi-isomorphism allows the proof to connect these complexes and subsequently deduce the desired conclusion that F′F′ is isomorphic to a summand of j∗Eα′j∗Eα′ in the derived category D(OU−Mod)D(OU−Mod). This step likely involves establishing the necessary morphisms and categorical relations to bridge the gap between these complexes, forming the linchpin of the entire proof.

I had discussed this proof in my essay Do Androids Prove Theorems in Their Sleep as an illustration of the fact that human proofs, like fictional narratives, are organized around key “turning points.” In contrast, a formalized proof is conceived as a featureless chain of propositions, no one of which is more important than the others.

ChatGPT’s responses suggest that it processes the proofs as narratives rather than as sequences of intrinsically meaningless symbols. Those who anticipate a future of AI mathematicians should find this encouraging; but how can we tell whether the system isn’t responding to an irrelevant cue, like the placement of the “crucial step” at the beginning, or in the first numbered formula?

Can ChatGPT be trained to understand mathematics?

Since no one has devised an algorithm to compute whether a Columbia professor has correctly understood a text, or whether an LLM has correctly summarized a mathematical proof, one option would be behaviorist: to judge understanding on the basis of correctness of output. This would align with the current tendency to reject explanation in favor of correlation, the latter being easier to quantify and also to monetize. So far ChatGPT’s output on various tests has not been better than “copying from your average peer.” But the problem may just be that available training sets are not large enough.

Several options are available if you want to train ChatGPT to identify “turning points” in a proof without relying on anything like empathy:

If ChatGPT attends seminar talks it can pick up on non-verbal cues (when audience members sigh audibly, or begin to scratch their noses, or whatever)13;

If ChatGPT is invited to tea it can eavesdrop on conversations among mathematicians;

It can be assigned to read survey articles and look for the words “key point”;

Mathematicians can be paid to read proofs and highlight key points in yellow.
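The third option, at least, is easy to sketch. The Python below is a deliberately naive illustration and not a description of how any LLM is trained: the function name `find_flagged_sentences`, the extra cue phrases beyond “key point,” and the sample text are all hypothetical.

```python
import re

# Hypothetical cue phrases; the post itself names only "key point".
CUE_PHRASES = ("key point", "key idea", "crucial step")


def find_flagged_sentences(text, cues=CUE_PHRASES):
    """Return the sentences of `text` containing any cue phrase (case-insensitive)."""
    # Naive split on ., ! or ? followed by whitespace; real survey articles,
    # with their abbreviations and formulas, would need something sturdier.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if any(cue in s.lower() for cue in cues)]


# Made-up sample text, for illustration only.
sample = (
    "Socrates starts from a square of side two. "
    "The key point is that the square built on the diagonal has twice the area. "
    "The rest is routine."
)
flagged = find_flagged_sentences(sample)
print(flagged)
```

A filter like this finds what an author has chosen to label as a key point, and nothing else; it has no way of recognizing a turning point that nobody flagged.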

Unfortunately, even if one of these methods led to the development of an algorithmically valid identification of common features of “key points,” it would be inadequate if ChatGPT is to acquire full autonomy. Such methods would not train ChatGPT to express independent opinions in an OpenAI board meeting.

Ideally one would want to be able to calculate the key point, so that ChatGPT could extract a coherent narrative from a formalized proof in Lean. I reviewed some suggestions for doing just that in my Androids article more than 10 years ago and found them unconvincing. But AI has undergone several revolutions in the meantime.

I just ran out of space; but the topic is fruitful and I will have to return to it in a future post.

Charles Duhigg, “The Inside Story of Microsoft’s Partnership with OpenAI,” New Yorker, December 1, 2023. I can’t keep track of all the articles that have come out since OpenAI’s temporary meltdown; you have probably read most of them too.

I’ve identified at least four deadly sins in the last few weeks’ exchanges. Whoever manages to find a complete set of seven gets a special mention in a future post.

As is so often the case, the Financial Times has published the most enlightening article I’ve seen on this story. But the most entertaining introduction to the mindset behind the OpenAI meltdown is Douglas Rushkoff’s Guardian piece on what he calls “The Mindset.” Anyone whose open mind has not been sucked into the vortex between OpenAI and DeepMind should want nothing to do with any of Mark “Augustus” Zuckerberg’s initiatives (including the Breakthrough Prizes) after reading this.

By a reader of Douglas Coupland’s book Machines Will Make Better Decisions Than Humans who prefers to remain anonymous.

UPDATE: Two hours and 31 minutes after my post went online, Head-of-TED Chris Anderson tweeted the very same suggestion, but for DeepMind’s Gemini. Synchronicity?

Both Turing Prizewinners use the word “understanding” in the impoverished sense discussed in this earlier post. This is unlikely to be sufficient for the creation of artificial general intelligence, or for protecting civilization from rogue AI, but may suffice for OpenAI’s business decisions.

The best account of this story, by far, is Andrew Marantz’s New Yorker article.

An opinion piece in the NY Times complained, on the basis of this misreading, that “To the best of our knowledge, none of these professors have received meaningful discipline, much less dismissal.”

This is the letter I signed.

This is a quotation from the second letter.

Valls certainly doesn’t deserve such a reading, but some of those directly affected by the murders may naturally feel this way.

“Higher Algebraic K-Theory of Schemes and of Derived Categories,” in P. Cartier et al., eds, The Grothendieck Festschrift Volume III, Boston: Birkhäuser (1990) 247-429.

ChatGPT managed to produce a plausible summary even though it could not correctly process the typeset mathematical formulas.

Compare the story of Clever Hans, the calculating horse.