Don't panic over mathematicians' "exposure" to LLMs

Reading between and beyond the lines of a NY Times report

A few weeks ago reader AG directed my attention to a report in the New York Times that researchers had found that the “job” of “mathematician” is the one “most exposed” to being replaced, or at least assisted, by large language models. More alarming, this conclusion was reached independently by human researchers and ChatGPT. Here is the key passage.

The researchers asked an advanced model of ChatGPT to analyze the O*Net data and determine which tasks large language models could do. It found that 86 jobs were entirely exposed (meaning every task could be assisted by the tool). The human researchers said 15 jobs were. The job that both the humans and the A.I. agreed was most exposed was mathematician.

(“In Reversal Because of A.I., Office Jobs Are Now More at Risk,” New York Times, August 24, 2023, my emphasis)

I replied in the comments section with a comment from the original article — entitled “GPTs are GPTs1: An Early Look at the Labor Market Impact Potential of Large Language Models” — a brief description of how the database defines the “job” of “mathematician,” and a promise to return to the question. Today’s post keeps that promise.

What is the “job” called “mathematician”?

Daniel Rock is the sole academic among the human researchers in the primary study cited in the NY Times article. This is what I found on Rock’s home page at the University of Pennsylvania’s Wharton School:

I'm an Assistant Professor in the Operations, Information, and Decisions (OID) department at the Wharton School of the University of Pennsylvania. I'm one of the affiliated faculty members at Wharton AI for Business. Broadly, my research is centered on the economic impact of digital technologies.… I'm also a Digital Fellow at both the MIT Initiative on the Digital Economy and the Stanford HAI Digital Economy Lab.

All very impressive, but Rock’s entire education and professional career has been in schools of management; his CV gives no sign of any training in the areas of the social sciences that would prepare him even to formulate coherent questions about what mathematicians do. The other three humans list ChatGPT’s creator OpenAI — now in a bromance, or “open relationship,” with Microsoft — as their affiliation, and are therefore presumably even more in the dark about our profession (and many others).

Since it’s obviously impossible for four humans to develop their own definitions for a comparative study of hundreds of distinct job titles, they chose to make use of the O*NET database, which bills itself as

your tool for career exploration and job analysis!

and which

is developed under the sponsorship of the U.S. Department of Labor/Employment and Training Administration (USDOL/ETA) through a grant to the North Carolina Department of Commerce.

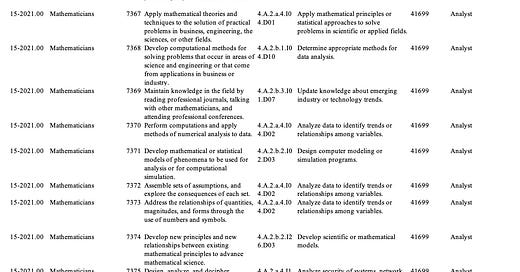

Each job title can consist in dozens of distinct tasks. Eleven tasks collectively define the “job” of “mathematician” for both human and artificial researchers:

Most of these are plausible, as I wrote in my reply to AG, and a few are familiar to academic mathematicians, notably item 7369. The DWA Titles suggest a bias toward identifying mathematics with applied mathematics. This is both realistic as a description of where mathematical scientists are employed and a reminder that AG’s reading of the report as a warning about the future of human mathematical research is probably misplaced.

There are also glaring omissions. It has to be assumed in particular that we cease to be mathematicians not only when we prepare and teach our classes, or when we fulfill our administrative responsibilities at the level of the department or the university, but also when we participate in the training of future members of the profession, either formally as thesis advisors or informally in any number of ways. Active participation in professional organizations, or organization of conferences, or editing journals, or responding to requests for letters of recommendation or for evaluation of candidates for prizes, or writing a manifesto about the ethical responsibilities of mathematicians: all of that is incidental to the “job.” Serving on the AMS Advisory Group on Artificial Intelligence and the Mathematical Community, or submitting an opinion to the advisory group? Not part of the “job!” This very minute, as I write about the “exposure” of our profession to AI, I am certainly doing something, but whatever it is, it’s not being a mathematician.

Mathematical research is the part of our work whose appropriation by machines is seen as a potential form of identity theft. In his Quanta video (155k views five days after its publication) Andrew Granville says

We value our work by the profundity of proofs, but if we can rely on a machine to get all the details right or most of the details right, then who are we? What is our training? What is it that we value? So when we don’t need to think about proof, then we’re not going to be trained to think about proof, so who are we going to become?

All this identity gets squeezed into 7374: “develop new principles and new relationships between existing mathematical principles to advance mathematical science.” Most of us, I believe, would find this an awkward way to explain our vocation, and the DWA Title — “develop scientific or mathematical models” — is entirely indifferent to proof. Indeed, a full search of the O*NET database finds the words proofread and waterproof numerous times but the word proof itself is absent. At best proof has to be understood as part of the explication of the word advance in 7374’s description; but that word is doing too much work to translate Granville’s question for the benefit of either ChatGPT or the human “annotators,” whose assignment is to evaluate the likelihood of LLM exposure — explanation to follow — of each Task and DWA. The authors admit as much, and not only with regard to mathematics:

A fundamental limitation of our approach lies in the subjectivity of the labeling. In our study, we employ annotators who are familiar with LLM capabilities. However, this group is not occupationally diverse, potentially leading to biased judgments regarding LLMs’ reliability and effectiveness in performing tasks within unfamiliar occupations. We acknowledge that obtaining high-quality labels for each task in an occupation requires workers engaged in those occupations or, at a minimum, possessing in-depth knowledge of the diverse tasks within those occupations. This represents an important area for future work in validating these results.2

The NY Times reporter was not prepared to wait to see the results validated by “future work.” The article did acknowledge, in the words of one of the authors, that automating the “tasks” isn’t the same as automating the “job”:

“There’s a misconception that exposure is necessarily a bad thing,” [one of the co-authors] said. After reading descriptions of every occupation for the study, she and her colleagues learned “an important lesson,” she said: “There’s no way a model is ever going to do all of this.”

But the Times reporter didn’t question the identification of “jobs,” including ours, as bundles of “Tasks,” and therefore saw no need to evaluate the “quality” of “labels for each task in an occupation.”

Thinking of mathematics as a way of being human, a perspective I have been promoting, is a helpful way to avoid confounding the vocation of mathematics with its concrete manifestation in the chaos of the employment market. The formulation also — a bit too neatly — postpones discussion of replacement of human mathematicians by machines until the latter are accepted by consensus into the community of humans (with full voting rights and so on). I will be devoting a future post to some of the obvious problems with this formulation; for now it suffices to note the challenges of using the O*NET database to explore “ways of being human.”

What it means to be "exposed"

In addition to the “GPTs are GPTs” article, the Times journalist cites studies by Pew Research Center and Goldman Sachs3, both of which also used the O*NET database to estimate “exposure” of jobs to AI automation. Thus we read that

Extrapolating our estimates globally suggests that generative AI could expose the equivalent of 300mn full-time jobs to automation.

in the Goldman Sachs article, while the Pew study estimates that

In 2022, 19% of American workers were in jobs that are the most exposed to AI.

An analysis that produces such specific numerical estimates must naturally rely on measurable properties of the population under investigation. The Pew article’s explanation is admirable in its brevity.

In our analysis, jobs are considered more exposed to artificial intelligence if AI can either perform their most important activities entirely or help with them.

Rock and the OpenAI researchers

define exposure as a measure of whether access to an LLM or LLM-powered system would reduce the time required for a human to perform a specific DWA or complete a task by at least 50 percent… while maintaining consistent quality.

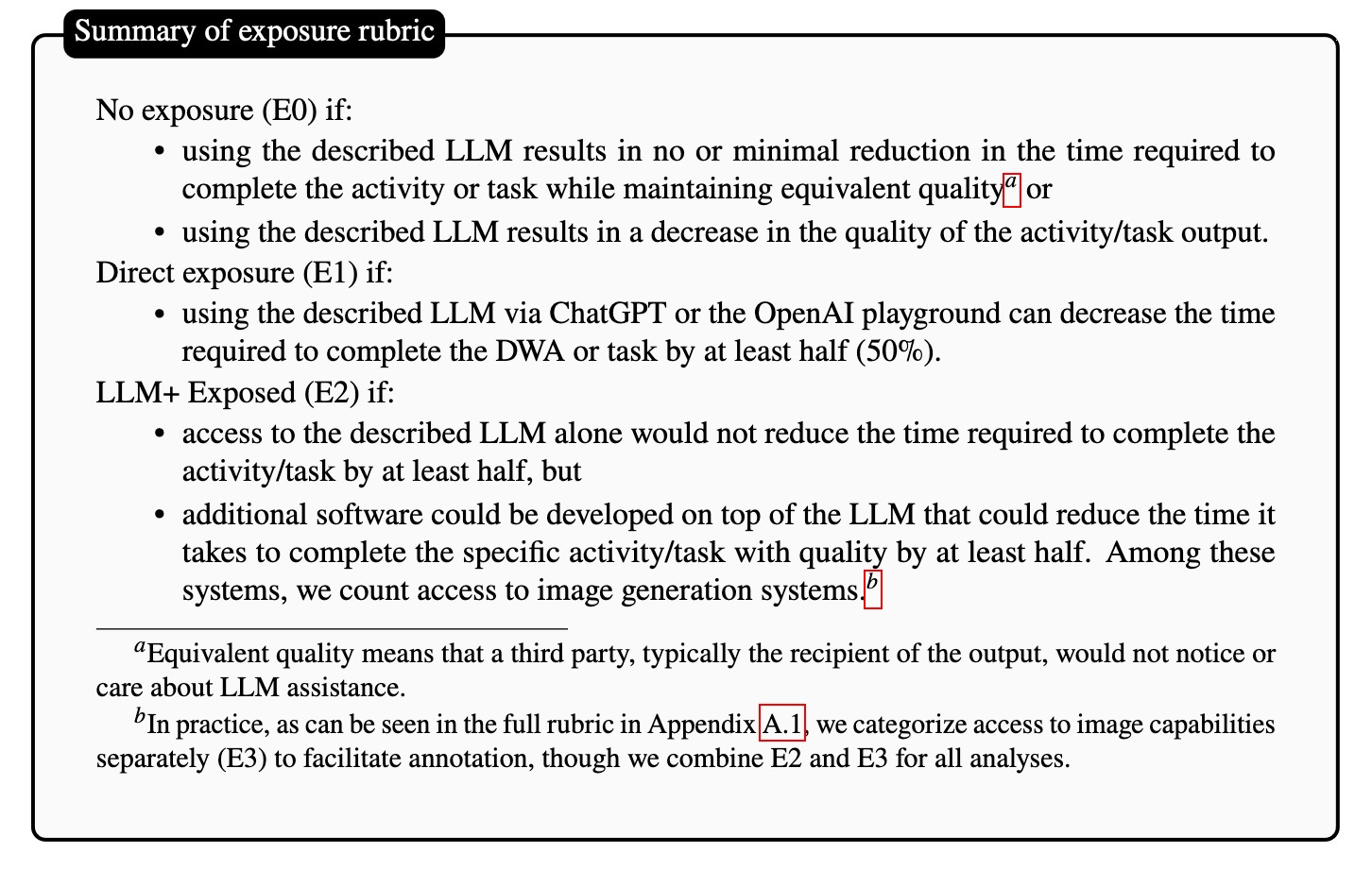

To this end they develop an elaborate tripartite “exposure rubric”:

and introduce three statistical measures of exposure, denoted by the Greek letters α, β, ζ that are just sums of E1 with multiples (0, .5, and 1 respectively) of E2. The details make for attractive graphics but it’s enough to quote how the authors summarize their conclusions in the introduction.

We define exposure as a proxy for potential economic impact without distinguishing between labor-augmenting or labor-displacing effects. We employ human annotators and GPT-4 itself as a classifier to apply this rubric to occupational data in the U.S. economy, primarily sourced from the O*NET database.… This exposure measure reflects an estimate of the technical capacity to make human labor more efficient; however, social, economic, regulatory, and other determinants imply that technical feasibility does not guarantee labor productivity or automation outcomes. Our analysis indicates that approximately 19% of jobs have at least 50% of their tasks exposed when considering both current model capabilities and anticipated tools built upon them. Human assessments suggest that only 3% of U.S. workers have over half of their tasks exposed to LLMs when considering existing language and code capabilities without additional software or modalities. Accounting for other generative models and complementary technologies, our human estimates indicate that up to 49% of workers could have half or more of their tasks exposed to LLMs.4

The authors caution that

It is unclear to what extent occupations can be entirely broken down into tasks … If indeed, the task-based breakdown is not a valid representation of how most work in an occupation is performed, our exposure analysis would largely be invalidated.

but nevertheless confidently announce that humans “labeled 15 occupations as fully exposed,” and list Mathematicians, Tax Preparers, Financial Quantitative Analysts, Writers and Authors, and Web and Digital Interface Designers among them; the LLM saw Mathematicians as fully exposed, just above Correspondence Clerks and Blockchain Engineers.

One wonders: what is the basis for this confidence?

We obtained human annotations by applying the rubric to each O*NET Detailed Worker Activity (DWA) and a subset of all O*NET tasks and then aggregated those DWA and task scores at the task and occupation levels. The authors personally labeled a large sample of tasks and DWAs and enlisted experienced human annotators who have reviewed GPT-3, GPT-3.5 and GPT-4 outputs as part of OpenAI’s alignment work.

At no point is it explained how one “applies the rubric,” nor when a human, not to mention GPT-4, is justified in choosing a label. We’ve already seen that the authors acknowledge “the subjectivity of the labeling.” Does that mean they are just guessing that the time necessary for all of the mathematicians “tasks” — including “reading professional journals, talking with other mathematicians, and attending professional conferences” — can be reduced by 50% with the help of LLMs?5

The impression that the labeling is not merely subjective but is in fact incoherent when one reads in a section devoted to “Skill importance” that

Our findings indicate that the importance of science and critical thinking skills are strongly negatively associated with exposure, suggesting that occupations requiring these skills are less likely to be impacted by current LLMs. Conversely, programming and writing skills show a strong positive association with exposure, implying that occupations involving these skills are more susceptible to being influenced by LLMs.

If I’m reading their Table 5 properly, mathematics — is this a science or a critical thinking skill? —turns out to be poorly associated with LLMs under measures α and β. The authors don’t comment on the apparent inconsistency of this result with the 100% “exposure” of mathematics reported by both human and LLM annotators. I’m inclined to conclude that the study says nothing meaningful at all about mathematics, and to advise AG not to panic.

So why is this research dignified by a report in the NY Times?

The hook is contained in the title of the Times article — “Office Jobs Are Now More at Risk” — and in its first two paragraphs:

The American workers who have had their careers upended by automation in recent decades have largely been less educated, especially men working in manufacturing.

But the new kind of automation … like ChatGPT and Google’s Bard … is changing that. …The jobs most exposed to automation now are office jobs, those that require more cognitive skills, creativity and high levels of education.

The authors, I suspect, would have detected a high correlation between reading the NY Times and exposure to the “new kind of automation.” Still, it’s a mystery why the Times published their article in late August when the OpenAI and Goldman Sachs studies were released in March.6

As one might expect, “Goldman Sachs Global Investment Research” is responsible for the quantitative material in their report; we read

Management teams of publicly-traded corporations increasingly cite AI in earnings calls—and at a rapidly increasing rate—and these indications of interest predict large increases in capital investment at the company level.

In contrast, the Times journalists stress the perspective of (white collar) workers rather than management: they write that

OpenAI has a business interest in promoting its technology as a boon to workers

The “boon,” however, turns out to be primarily an increase in productivity: the researchers explain that their “exposure measure”

reflects an estimate of the technical capacity to make human labor more efficient.7

The word “productivity” appears 50 times in the Goldman Sachs report:

The combination of significant labor cost savings, new job creation, and higher productivity for non-displaced workers raises the possibility of a productivity boom that raises economic growth substantially, although the timing of such a boom is hard to predict.… we estimate that AI could eventually increase annual global GDP by 7%.

The deep motivations of the Goldman Sachs universe are easy enough to detect in that short passage. Note in particular the “significant labor cost savings” at the higher end of the income scale. Readers are welcome to speculate about what deeply motivated the Times to publish their own account of this research. As for mathematicians: time will tell whether or not we have a reason to panic about AI, but we can safely ignore the conclusions reported in the Times article.

A pun on two expressions represented by the acronym GPT. The former is “generative pre-trained transformer” and I’ll let the reader guess the latter.

“GPTs are GPTs,” section 3.4.1.

The Goldman Sachs report saw 100% of jobs in “computer and mathematical” as “likely to be complemented by generative AI, and 29% of employment in the same group as “exposed to automation by AI.” The Pew report only mentions mathematics in the group of jobs including “critical thinking, writing, science and mathematics” whose reliance on analytical skills exposes them to automation.

"GPTs are GPTs,” section 1.

The Pew report includes a 3-page insert (pp. 10-12 here) entitled “How we determined the degree to which jobs are exposed to artificial intelligence.” The key sentence seems to be “We used our collective judgment to designate each activity as having high, medium or low exposure to AI."

The Pew study came out in late July.

“GPTs are GPTs,” section 1.

I am an 10th grader toiling till 2 in the dark of the night to understand things like convexity, elliptic curves & Abel-Ruffini theorem. Is all that useless? Is developing ingenuity unnecessary? This AI crap has been source of an astounding existential crisis, I seem to have no will to go on, Granville's video ended almost all hope, THIS SUCKS. Now, I do, still possess an immense desire to learn math, yet the possibility of proving hard theorems is too seductive for me to leave. Now my question is what the heck should I learn? What is important? Should I content myself with the passive nature of post-AI mathematics? Or perhaps retreat into a forest and do mathematics alone? My concern is not whether mathematicians are replaced by AI in my lifetime, but whether mathematicians can be replaced at all.

Thanks Michael, I'm a long time reader, first time poster. That was a great read.